With the release of RavenDB 6.0, we are now starting to focus on smaller features. The first one out of the gate, part of the RavenDB 6.0.1 release, is actually a set of enhancements around making backups faster, smaller and cheaper.

I just checked, and the core backup behavior of RavenDB hasn't changed much since 2010(!). In other words, decisions that were made almost 14 years ago are still in effect. There have been a… number of changes since then: in RavenDB itself, in its operating environment, and in the size of the databases that we deal with.

In the past year, we ran into a number of cases where people working with datasets in the high hundreds of GB to low TB range had issues with backups. In particular, with the duration of backups. After the 6.0 release, we had the capacity to do a lot more about this, so we took a look.

On first impression, you would expect that backing up a database whose size exceeds 750GB will take… a while. And indeed, it does. The question is, why? It’s a lot of data, sure. But where does the time go?

The format of RavenDB backups is really simple. It is just a GZipped JSON file. The contents are treated as a JSON stream that contains all the data in the database. This has a number of advantages: the file size is small, the format lends itself well to extension, it is streamable, etc. In fact, it is a testament to the early design decisions that we haven’t really had to touch the format in so long.
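To make the idea of a streamable, GZipped JSON file concrete, here is a minimal Python sketch. It is not RavenDB's actual file layout: writing one JSON document per line is an assumption made for illustration, and `write_backup` and `read_backup` are hypothetical names.

```python
import gzip
import io
import json


def write_backup(stream, documents):
    """Write documents as gzip-compressed JSON, one document per line."""
    with gzip.GzipFile(fileobj=stream, mode="wb") as gz:
        for doc in documents:
            gz.write(json.dumps(doc).encode("utf-8"))
            gz.write(b"\n")


def read_backup(stream):
    """Yield documents one at a time, without loading the whole file."""
    with gzip.GzipFile(fileobj=stream, mode="rb") as gz:
        for line in gz:
            yield json.loads(line)


# Illustrative data only; the field names are made up.
docs = [{"id": f"users/{i}", "name": f"User {i}"} for i in range(1000)]

buffer = io.BytesIO()
write_backup(buffer, docs)
compressed_size = len(buffer.getvalue())

buffer.seek(0)
restored = list(read_backup(buffer))
```

Both the writer and the reader only ever hold one document in memory, which is the property that makes this kind of format workable for very large databases.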

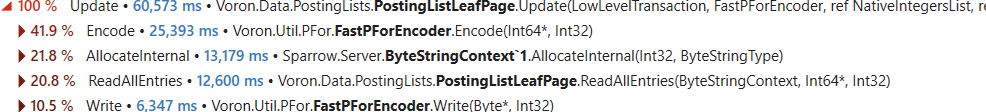

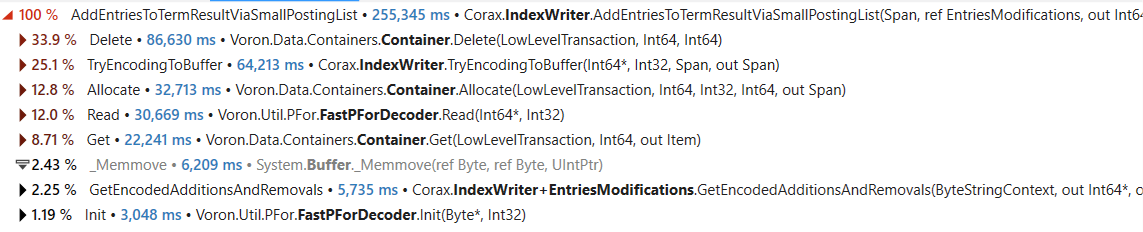

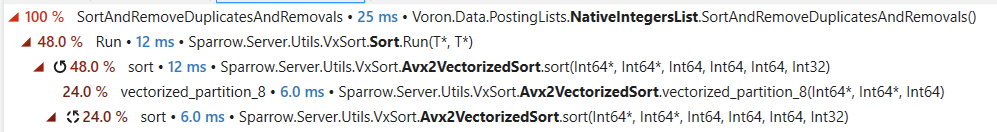

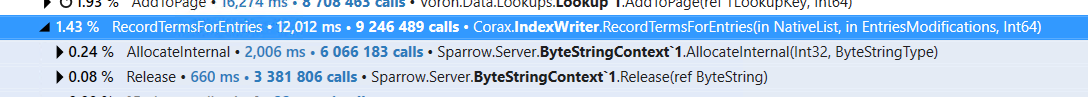

Given that the format is stable, and that we have a lot of experience with producing JSON, we approached the task of optimizing the backups with a good idea of where we should go. The problem was likely to be with I/O (we need to go through the entire database, after all). There were some (pretty wild) ideas flying around on how to address this, but the first thing to do, of course, was to run it under the profiler.

The results, as you can imagine, were not what we expected. We spend quite a lot of the time inside of GZip, compressing the data. When we set up the backup format all those years ago, we chose GZip with the Optimal compression mode. In other words, we wanted the file size to be as small as possible. That… makes sense, of course. But it turns out that the vast majority of the backup time is actually spent compressing the data, not on I/O.

Time to start looking deeper into that. GZip is an old format (it came out in 1992!), and in recent years a number of new compression algorithms have appeared (ZStd, Brotli, etc.). We decided to look into those in detail. GZip also has several modes that trade compression ratio against compression time.
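The ratio-versus-time trade-off is easy to observe on a small scale. The sketch below compresses synthetic JSON with Python's standard `gzip` module at levels 1 and 9. Those levels stand in for "Fastest" and "Optimal"; that mapping and the sample data are assumptions for illustration, not the settings or the dataset used in our tests.

```python
import gzip
import json
import time


def sample_json(count):
    """Build a synthetic, JSON-heavy payload (made-up order documents)."""
    docs = [
        {
            "id": f"orders/{i}",
            "company": f"companies/{i % 97}",
            "lines": [
                {"product": f"products/{(i * 7 + k) % 251}", "quantity": k + 1}
                for k in range(5)
            ],
        }
        for i in range(count)
    ]
    return "\n".join(json.dumps(d) for d in docs).encode("utf-8")


def measure(data, level):
    """Return (compressed size in bytes, elapsed seconds) for one level."""
    started = time.perf_counter()
    compressed = gzip.compress(data, compresslevel=level)
    elapsed = time.perf_counter() - started
    return len(compressed), elapsed


data = sample_json(20_000)
results = {level: measure(data, level) for level in (1, 9)}

for level, (size, elapsed) in results.items():
    print(f"gzip level {level}: {size / len(data):.1%} of original, {elapsed:.3f}s")
```

The exact numbers depend on the machine and the data, so treat the output as a way to see the shape of the trade-off rather than as a benchmark.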

After a bit of experimentation, we have the following details when backing up a 35GB database.

| Algorithm & Mode | Size | Time |
|---|---|---|
| GZip - Optimal | 5.9 GB | 6 min, 40 sec |
| GZip - Fastest | 6.6 GB | 4 min, 7 sec |
| ZStd - Fastest | 4.1 GB | 3 min, 1 sec |

The data in this case is mostly textual (JSON), and it turns out that we can reduce the backup time by more than half while saving 30% in the space we take. Those are some nice numbers.
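For anyone who wants to check that arithmetic, here is a short Python sketch that derives both figures from the GZip - Optimal and ZStd - Fastest results quoted above. The helper names are hypothetical; the inputs are the measured sizes and times.

```python
def seconds(minutes, secs):
    """Convert a 'min, sec' pair to seconds."""
    return minutes * 60 + secs


def reduction(before, after):
    """Fraction saved when going from `before` to `after`."""
    return 1 - after / before


# Measurements for the 35GB database.
gzip_optimal = {"size_gb": 5.9, "time_s": seconds(6, 40)}
zstd_fastest = {"size_gb": 4.1, "time_s": seconds(3, 1)}

time_saved = reduction(gzip_optimal["time_s"], zstd_fastest["time_s"])
space_saved = reduction(gzip_optimal["size_gb"], zstd_fastest["size_gb"])

print(f"time saved:  {time_saved:.0%}")
print(f"space saved: {space_saved:.0%}")
```

The time saving works out to roughly 55% and the space saving to a little over 30%.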

You’ll note that ZStd also has a mode that controls compression ratio vs compression time. We tried checking this as well on a different dataset (a snapshot of the actual database) with a size of 25.5GB and we got:

| Algorithm & Mode | Size | Time |
|---|---|---|
| ZStd - Fastest | 2.18 GB | 56 sec |
| ZStd - Optimal | 1.98 GB | 1 min, 41 sec |
| GZip - Optimal | 2.99 GB | 3 min, 50 sec |

As you can see, GZip isn’t going to get a participation trophy at this point, coming dead last for both size and time.
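Another way to read these results is as a compression ratio and a throughput figure per run. The Python sketch below derives both from the measurements on the 25.5GB dataset. It assumes decimal units (1 GB = 1000 MB), and `summarize` is a hypothetical helper.

```python
SOURCE_GB = 25.5  # size of the database snapshot being backed up

# (backup size in GB, backup time in seconds), from the measurements above.
runs = {
    "ZStd - Fastest": (2.18, 56),
    "ZStd - Optimal": (1.98, 1 * 60 + 41),
    "GZip - Optimal": (2.99, 3 * 60 + 50),
}


def summarize(size_gb, seconds):
    """Return (compression ratio, MB of source data processed per second)."""
    ratio = SOURCE_GB / size_gb
    throughput_mb_s = SOURCE_GB * 1000 / seconds  # assumes 1 GB = 1000 MB
    return ratio, throughput_mb_s


summary = {name: summarize(*values) for name, values in runs.items()}

for name, (ratio, mb_s) in summary.items():
    print(f"{name}: {ratio:.1f}x smaller, {mb_s:.0f} MB/s of source data")
```

On these numbers, ZStd in its fastest mode processes the source data roughly four times faster than GZip in its optimal mode, while still producing the smaller file.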

In short, RavenDB 6.0.1 will use the new ZStd compression algorithm for backups (and export files), and you can expect to have greatly reduced backup times as well as smaller backups overall.

This is now the default mode for RavenDB 6.0.1 or higher, but you can control that in the backup settings if you so wish.

Restoring from old backups is no issue, of course, but restoring a ZStd backup on an older version of RavenDB is not supported. You can configure RavenDB to use the GZip algorithm if that is required.

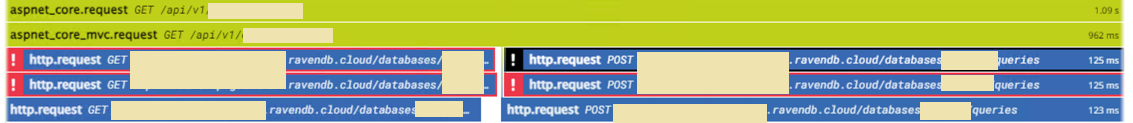

Another feature that is going to improve backup performance is the notion of backup mode, which you can see in the image above. RavenDB backups support multiple destinations, so you can back up to Amazon S3 as well as Azure Blob Storage as a single unit.

At the time of designing the backup system, that was a nice feature to have, since we assumed that you’d usually have a backup to a local disk (for quick restore) as well as an offsite backup for longer-term storage. In practice, almost all backup configurations in RavenDB have a single destination. However, because we support multiple backup destinations, the backup process first writes the backup file to the local disk and only then uploads it.

The new Direct Upload mode only supports a single destination, and it streams the data to the destination directly, without touching the disk. As a result of this change, we are using far less I/O during backup procedures as well as reducing the total time it takes to run the backup.

This is especially useful if your backup destination is nearby and the network is good. This is frequently the case in the cloud, where you are backing up to S3 in the same region. In our tests, it reduced the backup time by 30% in some cases.
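The difference between the two modes can be sketched in a few lines of Python. This is not RavenDB's implementation: `upload` is a hypothetical callable standing in for whatever client writes to the destination, the function names are made up, and GZip framing is used only because it is available in the standard library.

```python
import gzip
import io
import os
import tempfile
import zlib

CHUNK = 64 * 1024


def compressed_chunks(source, level=1):
    """Yield gzip-framed chunks as the source stream is read."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, 31)  # wbits=31: gzip container
    while True:
        block = source.read(CHUNK)
        if not block:
            break
        out = compressor.compress(block)
        if out:
            yield out
    yield compressor.flush()


def backup_via_local_file(source, upload):
    """Stage the whole backup on local disk, then upload it from there."""
    with tempfile.NamedTemporaryFile(delete=False) as staged:
        for chunk in compressed_chunks(source):
            staged.write(chunk)
        path = staged.name
    try:
        with open(path, "rb") as staged:
            while True:
                chunk = staged.read(CHUNK)
                if not chunk:
                    break
                upload(chunk)
    finally:
        os.remove(path)


def backup_direct(source, upload):
    """Send each compressed chunk to the destination as soon as it exists."""
    for chunk in compressed_chunks(source):
        upload(chunk)


# In-memory stand-ins for the database stream and the remote destination.
payload = b'{"id": "users/1", "name": "User 1"}\n' * 50_000
direct, staged = io.BytesIO(), io.BytesIO()
backup_direct(io.BytesIO(payload), direct.write)
backup_via_local_file(io.BytesIO(payload), staged.write)
```

Both paths deliver the same bytes to the destination. The staged path writes the full backup to disk and reads it back before the upload can finish, which is the extra I/O and waiting time that the direct path avoids.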

From a coding perspective, those are not huge changes, but together they mean that backups in RavenDB are now cheaper, faster, and far smaller. That translates to a better operating environment for your system. It also means that the costs of storing backups are going to go down by a significant amount.

You can read all the technical details about the new features in the feature announcements: