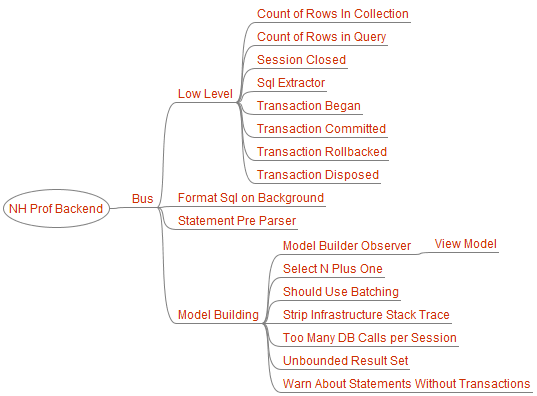

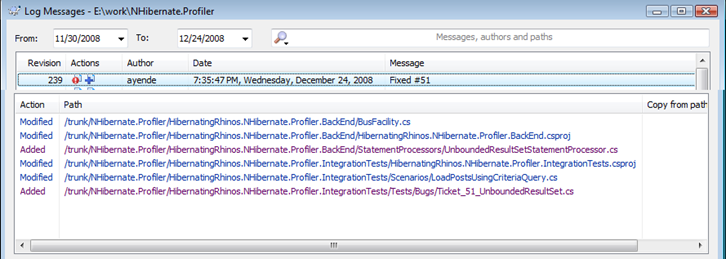


So, I talked a bit about the architecture and the feature itself, but let us see how I actually built and implemented this feature.

I want to point out that this is the actual code that goes into the product. It is also one of the more complex processors, because of the possible state changes: the row count may not be known yet when the statement is first attached to the session.

```csharp
using System;

public class UnboundedResultSetStatementProcessor : IStatementProcessor
{
    public void BeforeAttachingToSession(SessionInformation sessionInformation, FormattedStatement statement)
    {
    }

    public void AfterAttachingToSession(SessionInformation sessionInformation, FormattedStatement statement, OnNewAction newAction)
    {
        // Row count already known: decide right away
        if (statement.CountOfRows != null)
        {
            CheckStatementForUnboundedResultSet(statement, newAction);
            return;
        }
        // Otherwise re-check on every refresh until the check reports a decision
        bool addedAction = false;
        statement.ValuesRefreshed += () =>
        {
            if (addedAction)
                return;
            addedAction = CheckStatementForUnboundedResultSet(statement, newAction);
        };
    }

    // Returns true once a final decision has been made (a limit keyword was found,
    // or the alert was raised); false means "check again on the next refresh".
    public bool CheckStatementForUnboundedResultSet(FormattedStatement statement, OnNewAction newAction)
    {
        if (statement.CountOfRows == null)
            return false;
        // we are discounting statements returning 1 or 0 results because
        // those are likely to be queries on either PK or unique values
        if (statement.CountOfRows < 2)
            return false;
        // we don't check for select statement here, because only selects have row count
        var limitKeywords = new[] { "top", "limit", "offset" };
        foreach (var limitKeyword in limitKeywords)
        {
            //why doesn't the CLR have Contains() that takes StringComparison ??
            if (statement.RawSql.IndexOf(limitKeyword, StringComparison.InvariantCultureIgnoreCase) != -1)
                return true;
        }
        newAction(new ActionInformation
        {
            Severity = Severity.Suggestion,
            Title = "Unbounded result set"
        });
        return true;
    }

    public void ProcessTransactionStatement(TransactionMessageBase tx)
    {
    }
}
```
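The part that deals with state changes is the deferred re-check in `AfterAttachingToSession`: if the row count is not available yet, the check is re-run on every `ValuesRefreshed` until it reports a decision. Here is a minimal sketch of that pattern in isolation; `Statement`, `Refresh` and `DeferredCheck.AttachCheck` are hypothetical stand-ins, not the product's types.

```csharp
using System;

// Hypothetical stand-in for FormattedStatement: the row count may arrive later.
public class Statement
{
    public int? CountOfRows { get; private set; }
    public event Action ValuesRefreshed;

    // Simulates the backend pushing updated values for this statement.
    public void Refresh(int? countOfRows)
    {
        CountOfRows = countOfRows;
        ValuesRefreshed?.Invoke();
    }
}

public static class DeferredCheck
{
    // Runs the check immediately if the row count is known; otherwise re-runs it
    // on each refresh until it returns true (a final decision was made).
    public static void AttachCheck(Statement statement, Func<Statement, bool> check)
    {
        if (statement.CountOfRows != null)
        {
            check(statement);
            return;
        }
        bool decided = false;
        statement.ValuesRefreshed += () =>
        {
            if (decided)
                return;
            decided = check(statement);
        };
    }
}
```

A check that returns `false` (row count still unknown, or fewer than two rows) leaves the handler active, so a later refresh with a larger row count can still raise the alert.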

And now the test:

```csharp
using System.Linq;
using NUnit.Framework;

[TestFixture]
public class Ticket_51_UnboundedResultSet : IntegrationTestBase
{
    [Test]
    public void Will_issue_alert_for_unbounded_result_sets()
    {
        ExecuteScenarioInDifferentAppDomain<LoadPostsUsingCriteriaQuery>();

        var statement = observer.Model.RecentStatements.Statements
            .OfType<StatementModel>()
            .First();

        Assert.AreEqual(1, statement.Actions.Count);
        Assert.AreEqual("Unbounded result set", statement.Actions[0].Title);
    }
}
```

And, just for fun, the scenario that we are testing:

```csharp
using NHibernate;

public class LoadPostsUsingCriteriaQuery : IScenario
{
    public void Execute(ISessionFactory factory)
    {
        using (var session = factory.OpenSession())
        using (var tx = session.BeginTransaction())
        {
            // No SetMaxResults() call, so the generated SQL has no row limit
            session.CreateCriteria(typeof(Post))
                .List();
            tx.Commit();
        }
    }
}
```

And that is it; that is all you have to do to implement a new feature. This makes building the application much easier, because at any point in time we only have to deal with one thing. It is the aggregation of all these pieces that provides the actual value.

Also, notice that I heavily optimized my workflow for tests and scenarios. I can write just what I want to happen, without caring about how it actually happens. Optimizing for ease of testing is another architectural concern that I consider very important. If we don't deal with it, the tests would be a PITA to write, so they either wouldn't get written, or we would end up with tests that are hard to read.

Also, notice that this is a full integration test: we execute the entire backend, and we test the actual view model that the UI is going to display. I could have tested this using standard unit tests, but in this case I chose to see how everything works from start to finish.
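For comparison, the unit-test route would be cheap here, because the decision itself depends only on the SQL text and the row count. A minimal sketch, assuming a hypothetical `UnboundedResultSetHeuristic.ShouldAlert` extracted from `CheckStatementForUnboundedResultSet` (it is not part of the product code above, and it drops the "check again later" bookkeeping, answering only whether to alert for a given snapshot):

```csharp
using System;

// Hypothetical pure extraction of the decision made in
// CheckStatementForUnboundedResultSet; not part of the product code.
public static class UnboundedResultSetHeuristic
{
    private static readonly string[] LimitKeywords = { "top", "limit", "offset" };

    // Returns true when the "Unbounded result set" suggestion should be raised.
    public static bool ShouldAlert(string rawSql, int? countOfRows)
    {
        // Row count not known yet: nothing to decide
        if (countOfRows == null)
            return false;
        // 0 or 1 rows: most likely a lookup by primary key or unique value
        if (countOfRows < 2)
            return false;
        // Case-insensitive substring search, same as the original check
        foreach (var keyword in LimitKeywords)
        {
            if (rawSql.IndexOf(keyword, StringComparison.InvariantCultureIgnoreCase) >= 0)
                return false;
        }
        return true;
    }
}
```

Because the match is a plain substring search, `ShouldAlert("select * from Laptops", 5)` returns `false`: the table name contains "top". Matching on word boundaries would be stricter, at the cost of a slightly more involved check.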