In the series of posts so far, when discussing reads, I punted on the part where we know what to read. I mentioned that we get a whole batch of post ids from somewhere and discussed how that is going to work, but that was it. Now I want to talk about exactly how this works.

The timeline concept is a fairly simple one. We have a list of post ids that the user goes through. As they are browsing through the list, items are added at the top, etc. This is basically the Twitter model. Another alternative is that as you scroll, if there are new items in the list, you are shown them before older values (the Facebook approach), but that is more complex.

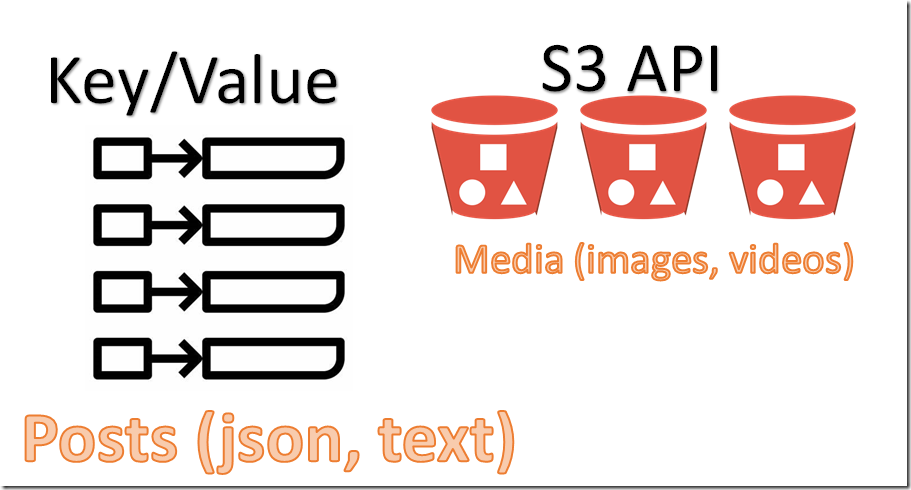

Conceptually, the timeline is as simple as:

In other words, we just have a list of post ids, we add items at the end and as we scroll we keep track of where we started. That is sufficient to get us pretty much all the features that we want, surprisingly enough.

When you go to the home page and look at your timeline, you’ll typically start with whatever the latest value is there. We’ll record the last position we saw and then start scrolling backward in time. In other words, if we have 10,000 items in the timeline, we’ll record that the position we started at was 10,000 and then start going back toward zero. If there are new items, the size of the list will increase and we can jump back to the top, etc.
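To make the conceptual model concrete, here is a minimal sketch in Python. The `Timeline` class and its method names are my own invention for illustration, and a plain in-memory list stands in for whatever storage would actually be used.

```python
from dataclasses import dataclass, field


@dataclass
class Timeline:
    """Conceptual model only: an append-only list of post ids."""
    post_ids: list[int] = field(default_factory=list)

    def append(self, post_id: int) -> None:
        # New items always go at the end of the list.
        self.post_ids.append(post_id)

    def start_position(self) -> int:
        # A reader records the size of the list when it starts browsing.
        return len(self.post_ids)

    def page_back(self, position: int, count: int) -> tuple[list[int], int]:
        # Return up to `count` ids just before `position`, newest first,
        # together with the position to continue from.
        start = max(0, position - count)
        return self.post_ids[start:position][::-1], start

    def new_since(self, position: int) -> list[int]:
        # Everything appended after the reader started, oldest first.
        return self.post_ids[position:]


timeline = Timeline()
for post_id in range(1, 11):
    timeline.append(post_id)

started_at = timeline.start_position()            # 10
page, cursor = timeline.page_back(started_at, 3)  # [10, 9, 8] and 7
timeline.append(11)                               # a new post arrives
fresh = timeline.new_since(started_at)            # [11]
```

The reader only needs to remember two numbers: where it started and how far back it has gone. New items show up as the list growing past the recorded start position.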

That is simple enough, but how does this actually help us? That may be good if I wanted to see the public timeline of the entire network, but what about the actual features? I don’t care that a restaurant in Prague is now offering discounted deliveries, for example. I care about the accounts that I follow.

The idea is that we don’t have just a single such timeline, but many. In fact, pretty much all operations in the social platform can be represented using the timeline abstraction.

Let’s consider typical usage of an account. I’m adding posts, but I also want to be able to see people talking to me or about tags that I follow. How is that going to work?

Well, I’m actually going to have two timelines:

- Public Timeline – where we’ll add all the posts from the user, and maybe posts that mention / reply, etc.

- Private Timeline – where we’ll add posts from users that you follow, mentions, replies to discussions the user took part in, etc.

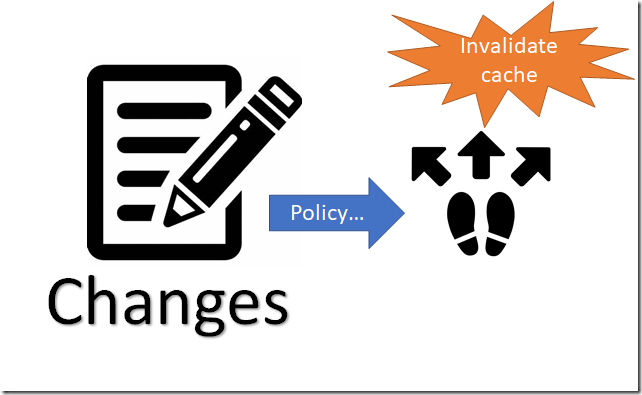

In both cases, the behavior of the system is identical. We simply go through the list. If you’ll recall, I left a lot unsaid when I discussed writing posts. In particular, how do I publish them to interested parties? This is where we start to apply policies. Part of the process of adding a post is to figure out what timelines it should go to.

By moving most of the cost to the write side, we drastically reduce the overall complexity. Furthermore, it also makes a lot more sense, given that most posts aren’t going to have a wide blast radius.

When posting a message, we need to consider the following:

- Is this a high impact account? (Let’s say, > 50,000 followers) If so, we’ll have special behaviors.

- Who is following this user?

- Is this a reply to another post?

- Are there mentions on this post? If so, need to apply the logic based on the mention policies.

As you can imagine, this is quite involved, but in general, the way it will work is something like this:

The key here is that the whole thing works by selecting which timelines we’ll publish to. Furthermore, a user has multiple timelines, and in the case of a mention, we can apply additional policies to see how the post gets routed. This is complex and often changing, but it also happens a lot less often than reads. So it is a net benefit to move all the costs to the write side.

Another thing to notice in this case is how we handle a reply. A reply is just appended to the timeline of the post. In other words, it is timelines all the way down. We want to have a single simple abstraction to handle as much of the system as we can. In this case, we need to handle replies on a post and that can be anything from very few to hundreds of thousands. By generating a timeline for the post as well, we can reuse all the same behaviors and it just works.
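The routing decision described above can be sketched roughly as follows. Everything here is an assumption made for illustration: the timeline names such as `users/alice/public`, the `Post` shape, and the `allows_mention` callback that stands in for the mention policies. The special behavior for high impact accounts isn't specified here, so the sketch simply skips the follower fan-out for them.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

HIGH_IMPACT_FOLLOWERS = 50_000  # threshold from the example in the text


@dataclass
class Post:
    post_id: int
    author: str
    reply_to: Optional[int] = None   # id of the post being replied to
    mentions: list[str] = field(default_factory=list)


def select_timelines(
    post: Post,
    followers: dict[str, set[str]],
    allows_mention: Callable[[str, str], bool],
) -> list[str]:
    """Return the (hypothetical) names of the timelines to append to."""
    # The author's own posts always land on their public timeline.
    targets = [f"users/{post.author}/public"]

    # Regular accounts fan out on write to each follower's private timeline.
    # High impact accounts need special handling, left out of this sketch.
    author_followers = followers.get(post.author, set())
    if len(author_followers) <= HIGH_IMPACT_FOLLOWERS:
        targets += [f"users/{name}/private" for name in sorted(author_followers)]

    # A reply is appended to the timeline of the post it replies to.
    if post.reply_to is not None:
        targets.append(f"posts/{post.reply_to}/replies")

    # Mentions are routed only if the mentioned user's policy allows it.
    for mentioned in post.mentions:
        if allows_mention(mentioned, post.author):
            targets.append(f"users/{mentioned}/private")

    # Drop repeated targets while keeping the original order.
    return list(dict.fromkeys(targets))


followers = {"alice": {"bob", "carol"}}
post = Post(post_id=1, author="alice", reply_to=42, mentions=["dave", "bob"])
targets = select_timelines(
    post, followers, lambda mentioned, author: mentioned != "dave"
)
```

All the policy decisions live in this one function on the write path. The read path never needs to know why a post id ended up in a given timeline.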

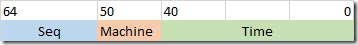

As for the timeline itself? It is merely a queue of post ids, and it allows you to set a position in it in an efficient manner. The list of post ids above is how it works conceptually, but we have to think about the numbers here. How big can a timeline get?

- The personal timeline of a user (their own posts) is limited by how many posts they can make. In general, even very heavy users will not top a few hundred a day and low thousands a month. That means that we have a reasonable upper bound on how big the personal timeline can grow. Ten years of posting 5,000 posts a month will get you to 600,000 posts, but I would assume that to be the top rate for anything that isn’t an automated system.

- Your Private Timeline (the posts from the accounts you follow) is impacted by how many people you follow and how prolific they are. There is a natural limit to how many people an account can follow, so there is a bound here, but assuming that you follow 1,000 accounts that all post 1,000 posts a month, that adds up to a million posts a month. Over a ten year span, that would be 120 million posts. That said, we’ll discuss other properties of this timeline below.

- A post’s timeline is all the replies that were made to the post. Most posts have very few replies, but some will garner a lot. It took me a minute to find a Tweet on Twitter that had close to 400,000 replies, for example.

So a timeline may be big, potentially very big. However, there is an interesting question here: how much do we actually need to keep?

The purpose of the Private Timeline (the posts from the accounts you follow), for example, is to show you the front page of the site. How far back do we need to keep that data? Is there a reason to keep your timeline from three years ago? The answer is probably no. We can keep this timeline at a certain size and likely benefit from a lot of space savings. On the other hand, the replies for a post can be quite interesting, and while they can grow very big, it probably makes sense to never trim them.
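As a rough illustration of that retention policy, here is a sketch where the front page timeline is capped and the replies timeline is not. The `append_trimmed` helper and the tiny cap of 5 entries are made up for the example. A real limit would be far larger.

```python
from typing import Optional


def append_trimmed(
    post_ids: list[int], post_id: int, max_size: Optional[int] = None
) -> None:
    """Append a post id, dropping the oldest entries beyond max_size."""
    post_ids.append(post_id)
    if max_size is not None and len(post_ids) > max_size:
        # The oldest ids sit at the front of the list.
        del post_ids[: len(post_ids) - max_size]


front_page: list[int] = []   # trimmed, old entries are not worth keeping
replies: list[int] = []      # never trimmed

for post_id in range(1, 9):
    append_trimmed(front_page, post_id, max_size=5)
    append_trimmed(replies, post_id)
```

After the loop, `front_page` holds only the five most recent ids while `replies` still holds all eight.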

So we have the concept of a timeline, but what is it actually going to be?

In terms of REST API, we are going to have the following endpoints:

- GET /timelines?id=1351081943163123854

- GET /timelines/sections/F4BE2048BF51F3DCC69EA4CA4ED08F12A36BD6524C9F12018BA0CE6F7C076BB2

In other words, we can access the timeline or a section in that timeline. I would rather show the output and then discuss what it all means. The first endpoint gives us the timeline itself, and looks like this:

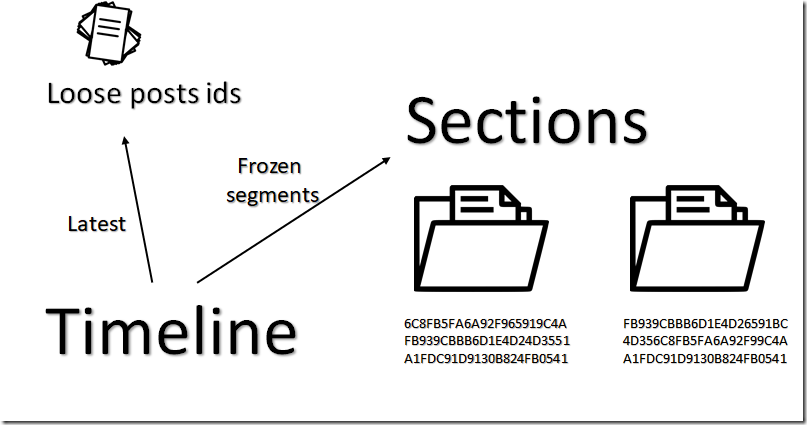

There are a few things to note here. When we ask for a timeline, we get the most recent posts in the timeline, as well as the past few sections. The way a timeline works, you can always append post ids to it, which works great, except that at some point the sheer size involved starts to be problematic.

If we consider a big timeline, one with 400,000 posts in it, that comes to about 3.2 MB used, just to store the post ids (8 bytes each). In practice, due to concurrency and distribution concerns, we can’t have an actual list of post ids, so we need some better management. Another factor is that you very rarely need or want to get the entire timeline, you want to start from the top and work your way down.

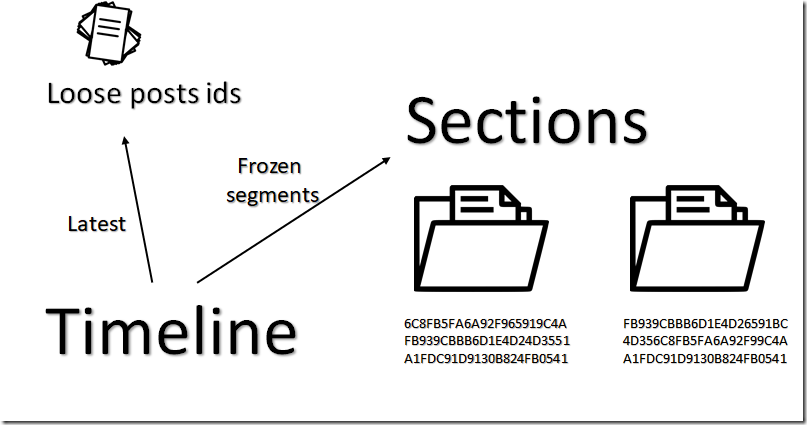

We can handle that easily enough using a two-stage approach. First, all the new post ids appended to a timeline are written in a “loose” form, each one with its own entry. Once we hit a certain limit (128, for example), we know that this is likely to grow bigger. We can grab the loose post ids in the timeline and gather them into a section. A section is an independently addressed part of the timeline. The idea is that we gather all of the post ids currently loose in the timeline, write them into a single object and compress that. Then we use the hash of the resulting object as the key in an object store.
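Here is a minimal sketch of that second stage. The specifics are assumptions on my part: GZip for compression, SHA-256 for the hash (the 64 hex character key in the URL above is consistent with that, but it is a guess), ids packed as 8-byte big-endian integers, and a plain dictionary standing in for the object store.

```python
import gzip
import hashlib
import struct

SECTION_THRESHOLD = 128  # the example limit from the text


def build_section(loose_ids: list[int]) -> tuple[str, bytes]:
    """Pack, compress and hash the loose ids into one addressable section."""
    # Each post id is stored as an 8-byte big-endian unsigned integer.
    raw = struct.pack(f">{len(loose_ids)}Q", *loose_ids)
    # mtime=0 keeps the output deterministic, so equal content gives equal keys.
    compressed = gzip.compress(raw, mtime=0)
    key = hashlib.sha256(compressed).hexdigest().upper()
    return key, compressed


def read_section(blob: bytes) -> list[int]:
    """Reverse of build_section: decompress and unpack the post ids."""
    raw = gzip.decompress(blob)
    return list(struct.unpack(f">{len(raw) // 8}Q", raw))


object_store: dict[str, bytes] = {}   # stand-in for S3 and the like
section_hashes: list[str] = []
loose = [1351081943163123854 + i for i in range(SECTION_THRESHOLD)]

if len(loose) >= SECTION_THRESHOLD:
    key, blob = build_section(loose)
    object_store[key] = blob          # content addressed by its hash
    section_hashes.append(key)        # the timeline records the new section
    loose = []                        # the loose entries can now be cleared
```

Because the key is derived from the content, writing the same section twice is harmless, which is handy when several nodes might try to do the same work.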

Side note: timeline sections are immutable. Once a section is created, it cannot change. You can add additional filtering on the timeline on read, on the other hand. The timeline also should handle the case where posts in the timeline have been deleted, since we cannot modify a section. For ease of implementation, we’ll also allow duplication in the post ids. Clients are expected to handle and ignore duplicate post ids that happen within a certain time range.

The reason that the timeline section is compressed is to reduce the size, obviously. In my testing, I was able to get a 65% reduction in size without any special effort. Throwing the compressed data into object storage (S3 and the like) also means that it is much easier to scale reads on them. If we have a user who is very popular, we can move that timeline to a compressed section faster to reduce load. This design explicitly acknowledges the problems with distributed systems and concurrency. It is possible that a compressed section will have an id that also appears in the loose portion of the timeline. The responsibility to handle such a scenario is on the client code, which is able to do so far more easily than the server side portion.

After compressing the loose posts in the timeline, we record the new section hash and allow clients to access it. It might be easier to see how that would work in the following image:

Given a post id size of 8 bytes, and assuming that we can compress it by 65% (my naïve tests using Brotli & GZip say yes, and we can probably do better than that), we can state that every thousand post ids or so we can generate a new section (meaning that it would be under 3 KB in size, in the end). Even a very big timeline with hundreds of thousands of entries would end up with just a few hundred sections at the top.
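The arithmetic is easy to check. This is just a back-of-the-envelope calculation using the numbers from the text (8 bytes per id, a 65% reduction, about 1,000 ids per section). The function names are mine.

```python
ID_SIZE_BYTES = 8
REDUCTION_PERCENT = 65     # size reduction from compression
IDS_PER_SECTION = 1_000


def raw_size(post_count: int) -> int:
    """Bytes needed to store the post ids uncompressed."""
    return post_count * ID_SIZE_BYTES


def section_size(ids_per_section: int = IDS_PER_SECTION) -> int:
    """Estimated bytes for one compressed section."""
    return raw_size(ids_per_section) * (100 - REDUCTION_PERCENT) // 100


def section_count(post_count: int, ids_per_section: int = IDS_PER_SECTION) -> int:
    """Number of sections needed, rounding up."""
    return -(-post_count // ids_per_section)


big_timeline_raw = raw_size(400_000)        # 3,200,000 bytes, about 3.2 MB
one_section = section_size()                # 2,800 bytes
big_timeline_sections = section_count(400_000)  # 400 sections
```

So a timeline with 400,000 entries is about 3.2 MB of raw ids, or roughly 400 small objects of a few kilobytes each once sectioned.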

The entire mechanism is very limited, quite intentionally. The external operations we allow on a timeline are append and get, with the client expected to understand the manner in which they are going to go deeper into the timeline. The limitations and expectations placed on the client (like allowing duplicate post ids, handling post ids that point to deleted posts, etc.) are all there to make it easy to handle scaling out the system.

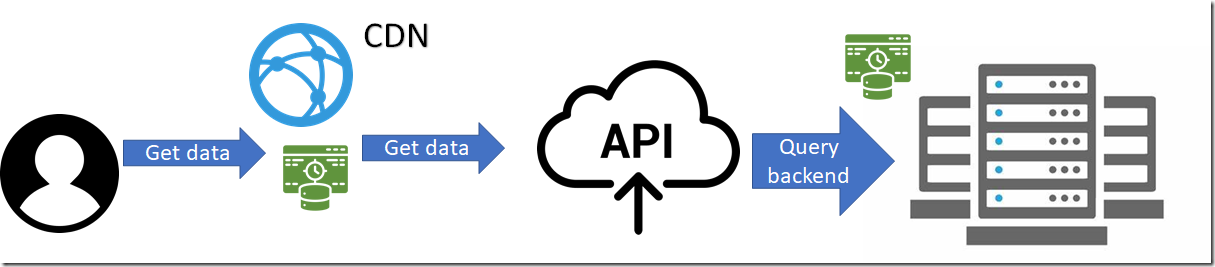

Consider a typical use case: I go into a popular account and look at their posts. Effectively, I’m browsing their public timeline. My interactions with the server go like this:

- Get a list of the post ids in the timeline. The first step is: GET /timelines?id=1351081943163123854

- This gets me the list of loose post ids and the recent sections.

- Notice that this API call is open for caching, so we get the scaling benefit of that as well.

- Get the actual posts, which I can do with the batch post read API that we discussed earlier.

In many cases, the cost of getting the timeline for the first time will be amortized over the reading time of many posts. The bulk read API gets me 128 posts at a time, so as the user is reading, I can get the next batch ready and give them the next part immediately.

Once I’m done with the loose posts, I can go into the compressed sections and do the same there. If each section has about 1,000 post ids, that will be sufficient for quite some time. And because I’m driving this from the client, it is very easy for me to scale. Throwing the data into an object store like S3 means that I get CDN support easily and my scaling issue is now: “serve a lot of small files”, which is a very well understood problem.
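The client side of this can be sketched as a lazy walk over the timeline. This is only an illustration under several assumptions: ids inside the loose list and inside each section are stored oldest first, `section_hashes` is ordered newest section first, and `fetch_section` is a hypothetical callback that downloads and decodes one section. For simplicity it drops every duplicate it has seen, rather than only those within a time range.

```python
from typing import Callable, Iterable, Iterator

BATCH_SIZE = 128  # size of a bulk read, as mentioned in the text


def walk_timeline(
    loose_ids: list[int],
    section_hashes: list[str],
    fetch_section: Callable[[str], list[int]],
) -> Iterator[int]:
    """Yield post ids newest first, skipping duplicates."""

    def all_ids() -> Iterator[int]:
        yield from reversed(loose_ids)
        for section_hash in section_hashes:
            # A section is only fetched once the reader gets this far.
            yield from reversed(fetch_section(section_hash))

    seen: set[int] = set()
    for post_id in all_ids():
        if post_id not in seen:
            seen.add(post_id)
            yield post_id


def batches(ids: Iterable[int], size: int = BATCH_SIZE) -> Iterator[list[int]]:
    """Group ids into lists of at most `size` for the bulk read API."""
    batch: list[int] = []
    for post_id in ids:
        batch.append(post_id)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


# Toy data: id 5 is both loose and in section "B", id 3 is in both sections.
sections = {"A": [1, 2, 3], "B": [3, 4, 5]}
fetched: list[str] = []


def fetch(section_hash: str) -> list[int]:
    fetched.append(section_hash)
    return sections[section_hash]


ordered = list(walk_timeline([5, 6, 7], ["B", "A"], fetch))
grouped = list(batches(ordered, size=3))
```

Since the walk is a generator, a reader who stops after the first batch never touches the object store at all.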

I still have to take into account permissions, but that is already something that we handle in the batch read API. Notice that for the common case of public posts, pretty much the whole thing has caching and distribution baked in, and the amount of work that we can let the rest of the system handle is very high.

Hot spots in the system are going to be handled by the infrastructure, not by our own code, big machines or clever algorithms. They are going to be handled by the architecture of the system making it simple to manage them, giving plenty of room for caching and CDN to take charge and reduce our costs.

That is, after all, the whole point of this series of posts.