One of the most common themes I run into when talking to customers, users and sundry people in tech is the repeated desire to fire developers.

Actually, that is probably too loaded a statement. It really comes in two parts:

- Developers usually want to focus on the interesting bits, and the business logic portions aren’t that much fun.

- The business analysts usually want to get things done and having to get developers to do that is considered inefficient.

If only there was a tool, or a pattern, or a framework, or something that would allow the business analysts to directly specify the behavior of the system… Why, we could cut the developers from the process entirely! And speaking as a developer, that would be a huge relief.

I think the original name for that was CASE tools, and that flopped. In fact, literally every single one of the attempts to replace developers with a tool has flopped. They got such a bad rap that people keep trying to implement them using different names. Some stuff can be done fairly easily, though. WYSIWYG for GUI is well established, and WordPress and Wix, to name the two examples that come to mind immediately, show that you can have a non-techie build a proper website. In fact, you can even plug in some pretty sophisticated functionality without burdening the user with too much.

But all that takes you to a point. And past that point, the drop off is harsh. Let’s take another common tool that is used to reduce the dependency on developers, SharePoint.

You pay close to double for actual developer time on SharePoint, mostly because it is so painful to work with it.

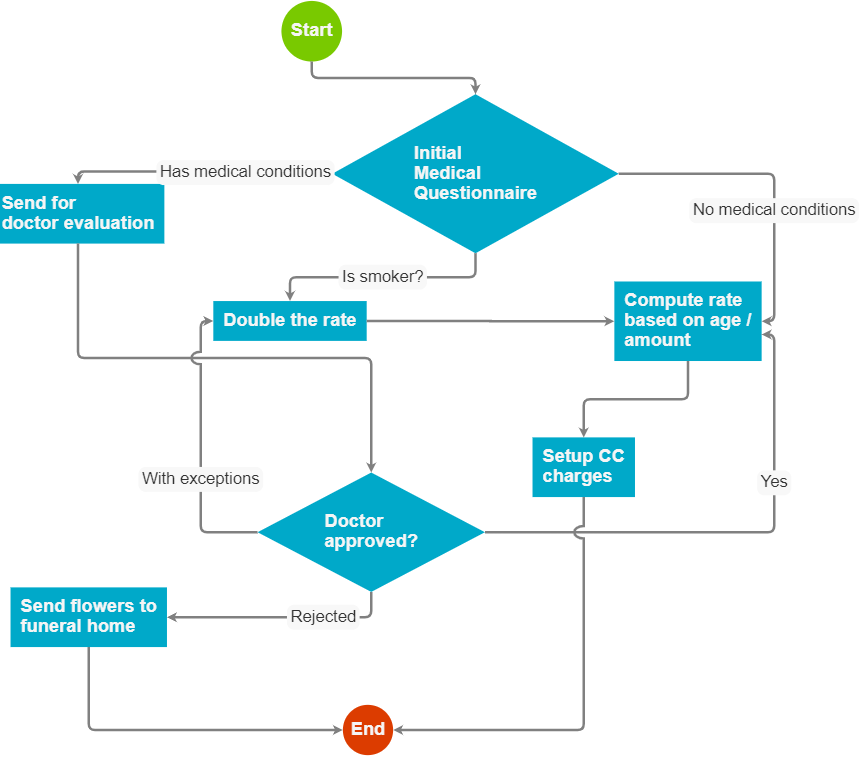

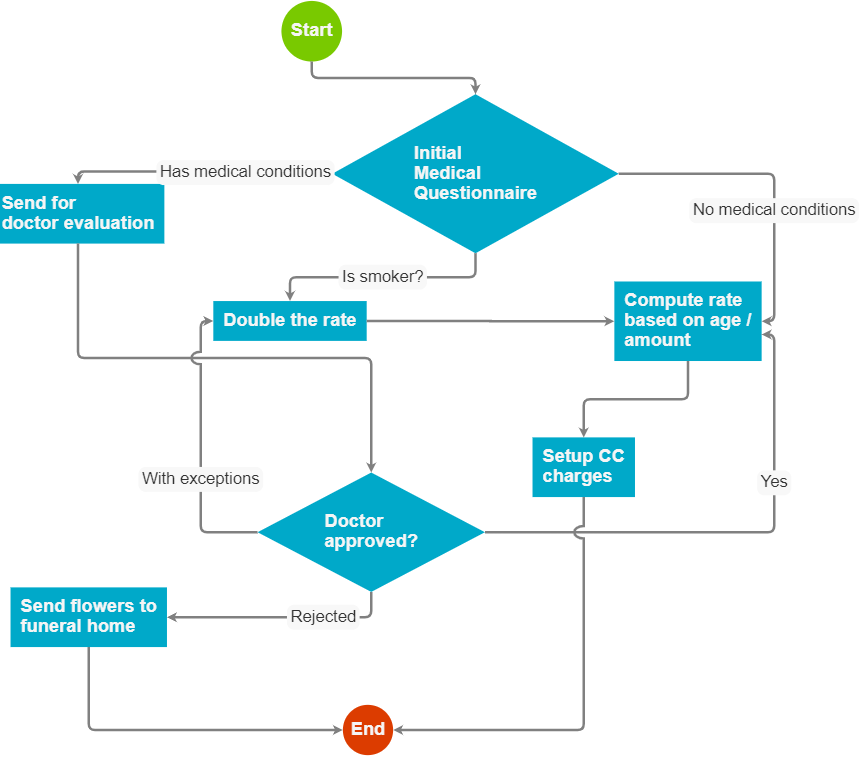

At a recent conference, I got into a conversation about business workflows and how to best implement them. You can look at the image on the right to get a good idea about what kind of process they were talking about.

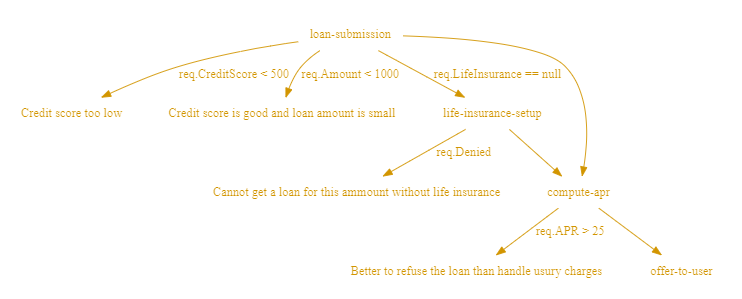

To make things real, I want to take a “simple” example: accepting a life insurance policy. Here is what the (extremely simplified) workflow looks like for issuing a life insurance policy:

This looks good, and it certainly should make sense to a business analyst. However, even though I have pretty much reduced the process to its bare bones, and even those have been filed away, this is still pretty complex. The real process of getting a policy is a lot more complex. Some questions don’t require a doctor’s evaluation (for example, smoking) and some require supplemental documentation (oh, you were hospitalized? Gimme all these records). The doctor may recommend different rates, outright rejection, some exceptions in the policy, etc. All of which need to be in the workflow. Actuarial tables need to be consulted for each of those cases, etc, etc, etc.

But something like the diagram above isn’t going to be able to handle this level of complexity. You are going to get lost very quickly if you try to put so many boxes on the screen.

So you need encapsulation.

And you’ll probably want to have a way to develop these business workflows, which means that they aren’t static.

So you need source control.

And if you have a complex business process, you likely have different people working on it.

So you need to be able to review changes, and merge them.

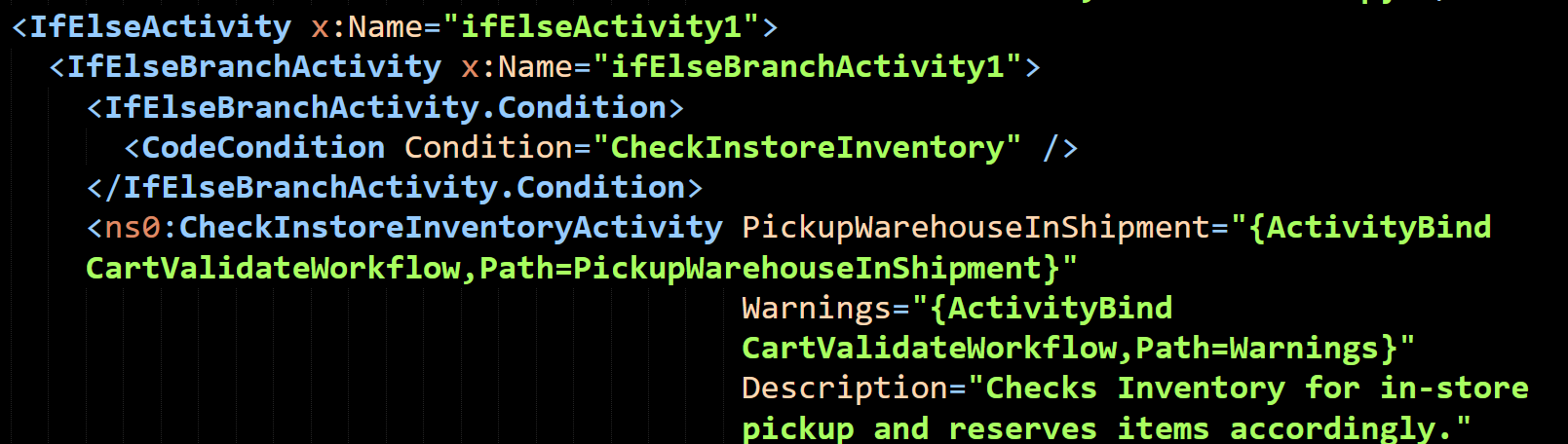

Note that this is explicitly distinct from being able to store the data in source control. Being able to actually diff two versions of such a process in a meaningful fashion is anything but trivial. Usually you are left with diffing the raw XML / JSON that stores the structure. Good luck with that.

If the workflow is complex, you need to be able to understand what is going on under various conditions.

So you need a debugger.

In fact, pretty soon you’ll realize that you’ll need quite a lot of the things that developers do. Except that your tool of choice doesn’t do that, or if it does, it does it poorly.

Another issue is that even if you somehow managed to bypass all of those details, you are going to be facing the same drop-off that you see elsewhere with tools that attempt to get rid of developers. At some point, the complexity grows too large, and you’ll call your development team and hand off the system to them. At which point they will be stuck with a very clunky tool that attempts to be quite clever and easy to use. It is also horribly limiting for a developer. Mostly because all of the “complexity” involved is in the business process itself, not in the actual complexity of what is going on.

There are better ways of handling that, and the easiest among them is to just use code. That can be… surprisingly versatile.
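To give a feel for what “just use code” might look like, here is a minimal sketch of a slice of the life insurance flow described earlier, in plain Python. Everything in it is made up for illustration: the names (`Application`, `DoctorOpinion`, `evaluate`), the rule that only hospitalized applicants go to a doctor, and the smoker surcharge, which is not taken from any real actuarial table.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class Decision(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    NEEDS_DOCUMENTS = "needs_documents"


@dataclass
class Application:
    # Hypothetical questionnaire answers, heavily simplified.
    smoker: bool = False
    hospitalized: bool = False
    records_supplied: bool = False


@dataclass
class DoctorOpinion:
    reject: bool = False
    rate_multiplier: float = 1.0
    exclusions: List[str] = field(default_factory=list)


@dataclass
class Outcome:
    decision: Decision
    rate_multiplier: float = 1.0
    exclusions: List[str] = field(default_factory=list)


# Made-up number, standing in for a real actuarial table lookup.
SMOKER_SURCHARGE = 1.5


def evaluate(app: Application,
             doctor_review: Callable[[Application], DoctorOpinion]) -> Outcome:
    # Hospitalization requires supplemental documentation first.
    if app.hospitalized and not app.records_supplied:
        return Outcome(Decision.NEEDS_DOCUMENTS)

    rate = 1.0
    exclusions: List[str] = []

    # Smoking does not need a doctor, just a different rate.
    if app.smoker:
        rate *= SMOKER_SURCHARGE

    # In this sketch, only hospitalized applicants go to the doctor,
    # who may reject, change the rate, or add exclusions.
    if app.hospitalized:
        opinion = doctor_review(app)
        if opinion.reject:
            return Outcome(Decision.REJECT)
        rate *= opinion.rate_multiplier
        exclusions.extend(opinion.exclusions)

    return Outcome(Decision.ACCEPT, rate, exclusions)
```

Calling it is just a function call, for example `evaluate(Application(smoker=True), some_doctor)`, where `some_doctor` is any function that returns a `DoctorOpinion`. The point is not this particular shape. It is that functions give you encapsulation, a text diff of this file is readable, and you can put a breakpoint inside `evaluate`, all without any special tooling.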

One of the primary reasons why businesses choose to use workflow engines is that they get pretty pictures that explain what is going on and look like they are easy to deal with. The truth is anything but that, but pretty sells.
