Transport level security, also known as TLS or SSL, is a way to secure a connection from prying eyes. This is done using math, so we know that this is good. I can also count to 20 if I take my shoes off, so you know you can trust me on that.

On very slightly more serious mode, SSL/TLS gives us one half of the security requirements. We can negotiate a secure chiper and a key and rest assured that no outside source can listen to what we are saying to the other side.

Notice that I said one half? The other half is knowing who is on the other side. This is usually done using certificates, which provide the public / private keys for the connection, and the signer of the certificate is what provides the identity of the remote connection. In other words, when I’m using SSL/TLS, I need to also know who am I going to be talking to, and then verify in some manner using the certificate that they provide me that they are indeed who they are.

Let us deconstruct the simplest of operations, GET https://my-awesome-service:

- First, we need to find the IP of my-awesome-service.

- Then, we negotiate an secured connection with this IP.

- Profit?

This would seem like the end of things, but we need to dig a bit deeper. I’m contacting my-awesome-service, but before I can do that, I need to first check what IP maps to that name. To do that I need to do a DNS query. DNS is usually unsecured, so anyone can see what kind of host names you are asking for. What is more interesting, there is absolutely nothing that prevent a Bad Guy from spoofing DNS responses to you. In fact, this has been a very fruitful area of attacks.

There is DNS Sec, which will protect you from forged requests in the last mile, but less than 15% of the world wide record are actually signed using DNS Sec, so you can usually assume that you won’t be using that. In fact, even if the domain is signed, because so many domains aren’t, most systems will be configured to assume that an unsigned request is valid by default, instead of the other way around. This make things fun at security circles, I’m sure. But for our purposes, you should know that DNS is great, but you probably shouldn’t rely on it. Errors, mistakes and outright forgery is possible.

If you want to see a simple example, head over to “/etc/hosts” on Linux or “%windir%/system32/drivers/etc/hosts” on Windows and add some fake entries there. You can have fun with pointing stackoverflow.com to lmgtfy.com, for example.

You can do it like so:

54.243.173.79 stackoverflow.com

With 54.243.173.79 being the IP address of lmgtfy.com. Once you have done that, requests that you think are going to stackoverflow.com will be sent to lmgtfy.com, with hilarity soon to follow.

Oh, except that this won’t work. StackOverflow is using HTTPS, and they are also using HSTS (HTTP Strict Transport Security). Basically, this means that once you have visited StackOverflow even once, your browser will remember that this domains require HTTPS to work, and will outright refuse to access the site without it.

But what is the problem? HSTS is great, but it just require HTTPS. So if I managed to spoof your DNS somehow (if I could modify the hosts file, I’m already admin and own the box, but assuming that I haven’t gotten there), all I would really need to do is to make sure that the websites I spoof give you a certificate. But here the second half of SSL come into play. The client making the request is going to validate that the hostname it provide is located in the certificate that the server provided. So far, that make sense. But the server could just generate whatever certificate it wants, no?

In order to prevent that, there is a chain of trust. Basically, you need to have a list of trusted root certificates that your trust, and you verify that the certificate that you got from the remote server was directly (or indirectly, in some cases) signed by them, presumably after some level of verification. Reading the actual list of trusted roots is interesting.

The Mozilla list has about 160 root certificates and includes such entities as the Government of Turkey, where all journalists will tell you that the government is free & fair (all those who would say otherwise are not there). On my Windows machine, there are about 50 root certificates, and at least at one point that included Equifax, who we know can be trusted. On a work machine, you can be fairly certain that there are additional root certificates (from the domain, for example). But for now, we’ll ignore the possibility of a bad trusted root certificate and assume that the system is working as it is meant to be. And to be fair, any violations are punished by revocation of the root certificate. This is the current state with the Equifax root certificate on my machine, for example, it has been revoked.

Another mitigation here is that there is an ongoing process to encourage certificate issuance transparency. That means that a domain can specify which CA are allowed to issue certificates for it (called key pinning). Of course, this is distributed via DNS, and we already seen that this ain’t too hot either, but it is a matter of defense in depth. Key pinning also create some fun ransomware options. If I can get control over your DNS records in some manner, including by spoofing them, I can set key pinning to a key that only I have, resulting in large number of users unable to access you site because it is not using the “correct” key. But I’m digressing. There is also the notion that a browser can do something called OCSP (online certificate status protocol), which basically states that a user can query the CA for whatever the certificate is valid. The catch is that if the CA doesn’t answer (vs. answer that the cert is invalid), the certificate is assume to be valid. This is done because a CA going down may then take down significant parts of the internet, leaving aside such concerns as the latency issues that this would require.

If you think the notion of a rouge trusted root is fantasy, there have been multiple cases of false certificates (DigiNotar, Symantec, TrustWave, etc), each with hundreds of certificates being issues (or even blank checks certificates, which can be used to generate any certificate you wish for). To combat that, there is now an effort to implement Certificate Transparency. Basically, in order to trust a certificate, it must show up in a public list. That allow admins to check that no one issued certificates for their domains.

This post has gotten quite long, so I’ll leave you with this worrisome ending and continue talking about how this applies to distributed systems in the next post.

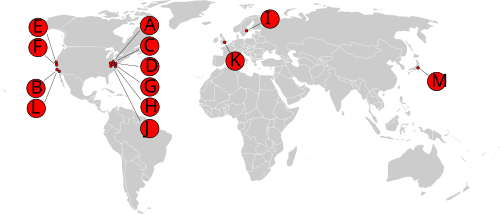

DNS is used to resolve a hostname to an IP. This is something that most developers already know. What is not widely known, or at least not talked so much is the structure of the DNS network. To the right you can find the the map of root servers, at least in a historical point of view, but I’ll get to it.

DNS is used to resolve a hostname to an IP. This is something that most developers already know. What is not widely known, or at least not talked so much is the structure of the DNS network. To the right you can find the the map of root servers, at least in a historical point of view, but I’ll get to it.

This stuff is complex, but usually, you don’t need to dive that deeply into this. At certain point, you are going to call to the network engineers and let them figure it out. We are focused on the developer aspect of understanding distributed systems.

This stuff is complex, but usually, you don’t need to dive that deeply into this. At certain point, you are going to call to the network engineers and let them figure it out. We are focused on the developer aspect of understanding distributed systems.