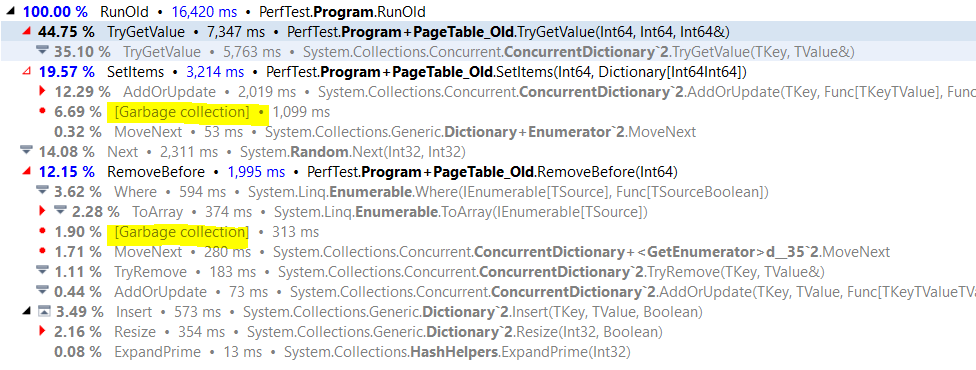

In my previous post I showed a performance conundrum. A code that has been optimized to reduced heavy allocation usage that became over twice as slow.

In particular, we had a problem here, the new code it 3.4 times slower than the new one, but how?

Now, the real scenario we had involved concurrent access, so it was much harder to figure out, but I cheated a bit when producing this image, I used sampling profiling, instead of tracing one. The major difference between the two is that tracing profiler will also give you the number of calls. This is called out as something that you would typically do because you want to analyze algorithmic complexity, but I find it incredibly useful to figure out what my code is actually doing.

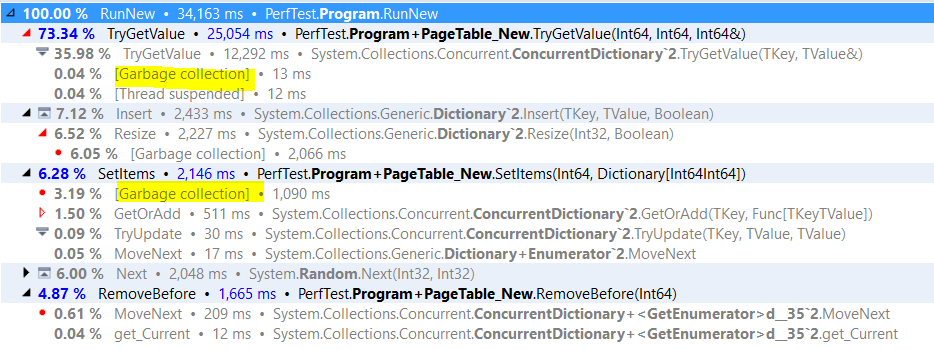

And indeed, looking at the same code using tracing profiler gives us the following two calls:

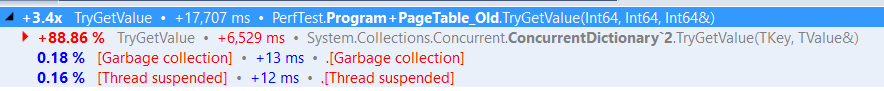

And when looking at the diffs between those two, we have:

So for some reason we are making 54 million more calls to the Equals method in the optimized version, but why? Both of those are using the exact same dictionary, using the exact same key type and the same keys, even.

In the real scenario we were facing, that wasn’t the case, so that made it hard to analyze the issue. We started looking into whatever we were doing some sort of cache poisoning by having the buffer holder as the dictionary value, instead of the array directly, but that didn’t pan out. We kept circling around the number of Equals calls. Note that the number of calls to TryGetValue is the same, as well as the number of calls to GetHashCode. So what is the diff?

The diff, quite simple, is not here at all.

The problem is in the RemoveBefore method. In the old version, if we removed all the entries, we’ll remove it completely from the dictionary. In the new version, we’ll reset the buffer so it can be used again next time. The problem with that approach is that it means that the dictionary is pretty big, much bigger than it would be in the case of the old version of the code. And that means that we’ll need to find the value (which is empty), then check its content. On the old version, we’ll just do a GetHashCode, then find that the table entry is over, and exit.

Indeed, all we had to do was change RemoveBefore to look like this:

And that gives us:

- 14.0 seconds & 1.1 GB of memory for old version

- 12.8 seconds & 0.4 GB of memory for new version

Which is pretty good result overall. It gets better when you break it apart to its component parts.

This is actually surprising, since we didn’t really set out to optimize this call very much, and it is pretty much unchanged in both versions. I think that this is likely because we keep the buffers around longer, so they are more likely to be in the cache.

This shows more than double the speed we previous had, which is pretty awesome, since this code is actually called per transactions, so anything that reduces that cost is golden.

This happens during a flush, and reducing its speed is important to reducing the time we hold the write lock, so this is pretty sweet.