Note: This post was written by Federico.

Recently at CoreFX there has been a proposal to deal with the typical case of everyone writing their own hash combining logic. I really like the idea of the framework providing that kind of functionality, because hashing is one of those things that can backfire pretty badly unless you really know what you are doing (and even if you do, you can still step off the cliff). But this post is not about the issue itself; it showcases a few little details of what happens in JIT land, details that we usually abuse, of course :) This is the issue: https://github.com/dotnet/corefx/issues/8034#issuecomment-260733285

Let me illustrate with some examples.

ValueTuple is a new type being introduced to support some of the new tuple functionality in C# 7. Because hashing is important, of course they implemented the ability to combine hashes. Now, let's suppose that we take the actual hashing code used for ValueTuple and call it with constants.

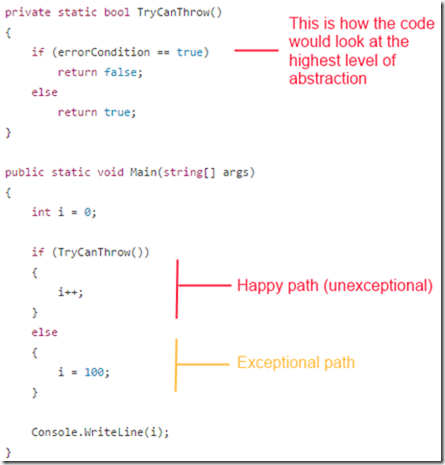
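
The code in the image is not reproduced as text, so here is a rough, hypothetical sketch of the same kind of comparison. `HashHelper`, `ConstantDemo` and the shift-add-xor formula `((h1 << 5) + h1) ^ h2` are assumptions for illustration, not necessarily the code from the image. Also note that current .NET versions compute `ValueTuple` hashes differently, so the two methods are not expected to return the same value.

```csharp
using System;

// Hypothetical helper standing in for HashHelper.Combine; the mixing
// formula below is an assumption, not necessarily the one from the post.
public static class HashHelper
{
    public static int Combine(int h1, int h2)
    {
        // Shift-add-xor mix; unchecked so overflow wraps even under /checked.
        return unchecked(((h1 << 5) + h1) ^ h2);
    }
}

public static class ConstantDemo
{
    // Goes through a ValueTuple<int, int>: a struct value is built,
    // then its GetHashCode() is invoked.
    public static int ViaValueTuple() => (1, 2).GetHashCode();

    // Calls the static helper directly with constants; after inlining,
    // an optimizing JIT is free to fold this into a single constant.
    public static int ViaHelper() => HashHelper.Combine(1, 2);
}
```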

With an optimizing compiler you would expect no difference between the two, but in reality there is one.

This is the actual machine code for ValueTuple:

So what can be seen here? First we create a struct on the stack, then we call the actual hash code method.
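
In source terms, the tuple version amounts to roughly the following: the tuple literal becomes a `ValueTuple<int, int>` value, and the hash comes from an instance call on that struct. `LoweringDemo` is a hypothetical name used only for this sketch; where the struct actually lives is up to the JIT.

```csharp
using System;

public static class LoweringDemo
{
    // Roughly what (1, 2).GetHashCode() amounts to: build the struct,
    // then call GetHashCode() on it.
    public static int Explicit()
    {
        ValueTuple<int, int> t = new ValueTuple<int, int>(1, 2); // the struct value
        return t.GetHashCode();                                  // the instance call
    }

    // The same thing written with C# 7 tuple syntax.
    public static int Literal() => (1, 2).GetHashCode();
}
```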

Now compare it with the use of HashHelper.Combine, which for all intents and purposes could be the actual implementation of Hash.Combine.

I know!!! How cool is that???

But let’s not stop there... let’s use actual parameters:

We use random values here to force the JIT to treat them as parameters, so that it cannot eliminate the code and convert it into a constant yet again.
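
A minimal sketch of that idea, assuming the same kind of hypothetical `HashHelper.Combine` (shift-add-xor formula assumed): the inputs come from `System.Random` at run time, so neither the tuple path nor the helper path can be folded into a constant. `ParameterDemo` is a hypothetical name, and this is not the harness from the original post.

```csharp
using System;

public static class HashHelper
{
    // Hypothetical shift-add-xor combine (assumed formula).
    public static int Combine(int h1, int h2) => unchecked(((h1 << 5) + h1) ^ h2);
}

public static class ParameterDemo
{
    // The inputs are produced by Random at run time, so the JIT cannot see
    // them as constants and fold the hash computation away.
    public static (int ViaTuple, int ViaHelper, int A, int B) Run(int seed)
    {
        var rnd = new Random(seed);
        int a = rnd.Next();
        int b = rnd.Next();
        return ((a, b).GetHashCode(), HashHelper.Combine(a, b), a, b);
    }
}
```

Seeding `Random` keeps the inputs reproducible between runs while still hiding their values from the compiler.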

The good thing is that this is extremely stable. But let's compare it with the alternative:

The question you may be asking yourselves is: does that scale? So, let's go overboard...

And the result is pretty illustrative:

The takeaway from this analysis is simple: just because the holding type is a struct doesn't mean it is free :)
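
To make the scaling question concrete, a hypothetical eight-value version of the comparison might look like this. An eight-element tuple is a nested `ValueTuple` (the eighth item lives in a `TRest` tuple), while the helper version is a plain chain of static calls. `HashHelper.Combine`, its formula and `WideDemo` are assumptions; this sketch is not the code behind the original results.

```csharp
using System;

public static class HashHelper
{
    // Hypothetical shift-add-xor combine (assumed formula).
    public static int Combine(int h1, int h2) => unchecked(((h1 << 5) + h1) ^ h2);
}

public static class WideDemo
{
    // Eight values through a tuple: (a, ..., h) is
    // ValueTuple<int, int, int, int, int, int, int, ValueTuple<int>>.
    public static int ViaValueTuple(int a, int b, int c, int d, int e, int f, int g, int h)
        => (a, b, c, d, e, f, g, h).GetHashCode();

    // Eight values through chained static calls: no intermediate struct.
    public static int ViaHelper(int a, int b, int c, int d, int e, int f, int g, int h)
    {
        int hash = HashHelper.Combine(a, b);
        hash = HashHelper.Combine(hash, c);
        hash = HashHelper.Combine(hash, d);
        hash = HashHelper.Combine(hash, e);
        hash = HashHelper.Combine(hash, f);
        hash = HashHelper.Combine(hash, g);
        return HashHelper.Combine(hash, h);
    }
}
```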

Many of the reasons focus on the readability of the code, but remember, my work (usually) revolves around writing pretty disgusting, albeit efficient, code. So even though I care about readability, it is mostly achieved through very lengthy comments in the code explaining why you shouldn't touch something unless you can prove the change will be faster.