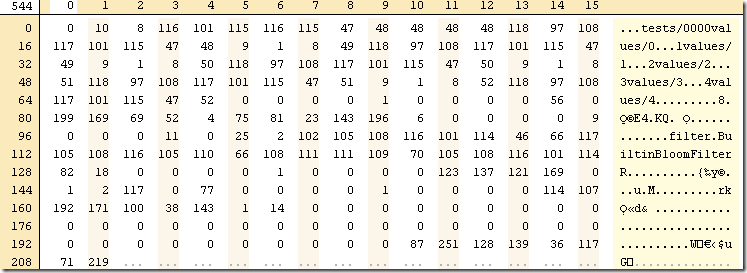

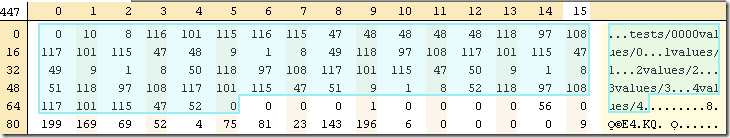

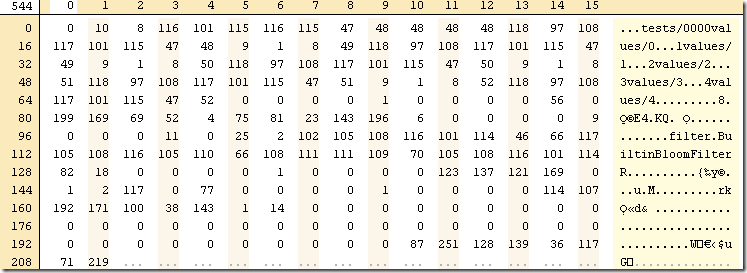

This is an example of an SST that stores:

- tests/0000 –> values/0

- tests/0001 –> values/1

- tests/0002 –> values/2

- tests/0003 –> values/3

- tests/0004 –> values/4

As well as a bloom filter for rapid checks.

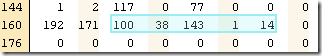

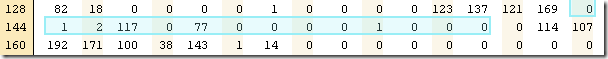

If you are wondering about the binary format, that is what this post is all about. We actually start from the end. We have the last 48 bytes of the file are dedicated to the footer.

The footer format is:

- Last 8 bytes of the file is a magic number: 0xdb4775248b80fb57ul – this means that we can quickly identify whatever this is an SST file or not.

Here is what this number looks like broken down to bytes:

- The other 40 bytes are dedicated for the metadata handle and the index handle.

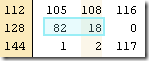

Those are two pair of longs, encoded using 7 bit encoding, in our case, here is what they look like:

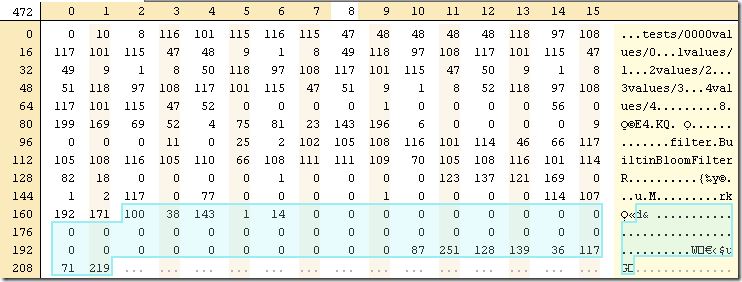

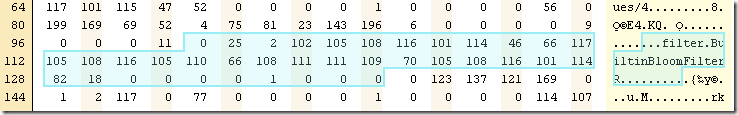

Let us see if we can parse them properly:

Note that in order to encode 143 properly, we needed two bytes, because it is higher than 127 (and we use the last bit to indicate if there are more items to read). The first two values are actually the metadata handle (position: 100, count: 38), the second are the index handle (position: 143, count: 14).

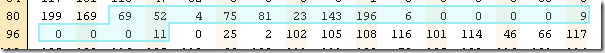

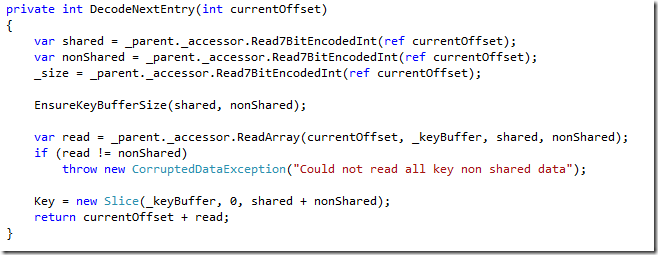

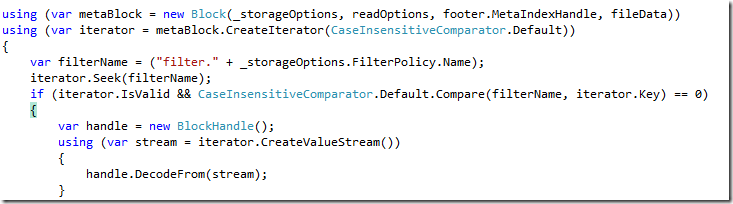

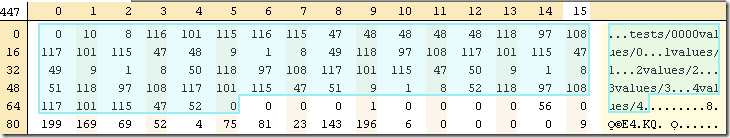

We will start by parsing the metadata block first:

You can see the relevant portions in the image.

The actual interesting bits here are the first three bytes. Here we have:

- 0 – the number of shared bytes with the previous key (there is no, which is why it is zero).

- 25 – the number of non shared bytes (in this case ,the full value, which is 25).

- 2 – the size of the value, in this case ,the value is the handle in the file of the data for the filter.

You can read the actual key name on the left ,”filter.BuiltinBloomFilter”, and then we have the actual filter data:

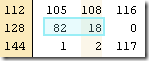

You can probably guess that this is the filter handle (position: 82, count: 18).

The rest of the data are two 4 bytes integers. Those are the restart array (position 130 –133) and the restart count (position 134 – 137). Restarts are a very important concept for reducing the size of the SST, but I’ll cover them in depth when talking about the actual data, not the metadata.

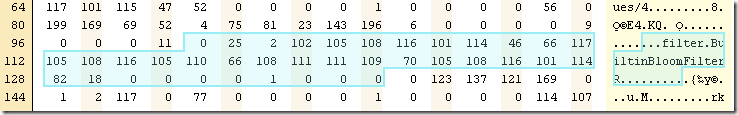

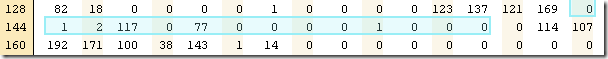

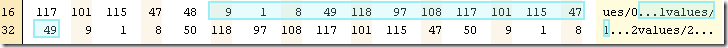

Next, we have the actual filter data itself, which looks like this:

This is actually a serialized bloom filter, which allows us to quickly decide if a specified key is here or not. There is a chance for errors, but errors can only be false positive, never false negative. This turn out to be quite useful down the road, when we have multiple SST files are need to work with them in tandem. Even so, we can break it apart into more detailed breakdown:

- The first 8 bytes are the actual serialized bloom filter bits.

- The 9th byte is the k value in the bloom filter algorithm. The default value is 6.

- The last value (11) is the lg value (also used in the bloom filter algo).

- The rest of the data is a bit more interesting. The 4 bytes preceding the 11 (those are 9,0,0,0) are the offset of valid data inside the filter data.

- The four zeros preceding that are the position of the relevant data in the file.

Honestly, you can think about this as a black box. Filter data is probably enough.

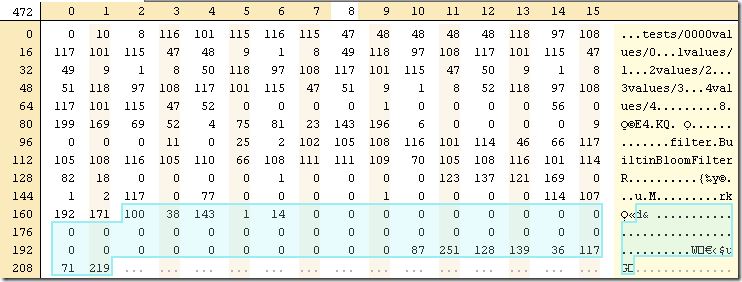

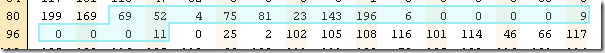

Now that we are done with the filter, we have to look at the actual index data. This is located on 143, but we know that the filter data is actually ended on 100 + 38, so why the gap? The answer is that after each block, we have a block type (compressed or not, basically) and the block CRC, which is used to determine if the file has been corrupted.

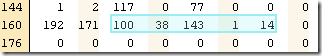

Back to the index block, is tarts at 143 and goes for 14 bytes, looking like this:

The last 4 bytes (32 bit int equal to 1) is the number of restarts that we have for this block. And the 4 bytes preceding them (32 bit int equal to 0) is the offset of the restarts in the index block.

In this case, we have just one restart, and the offset of that is 0. Hold on about restarts, I’ll get there. Now let us move to the beginning of the index block. We have the following three bytes: 0,1,2.

Just like in the meta block case, those fall under (shared, non shared, value size) and are all 7 bit encoded ints. That means that there is no shared data with the previous key (because there isn’t previous key), the non shared data is 1 and the data size is 2. If you memorized your ASCII table, 117 is lower case ‘u’. The actual value is a block handle. This time, for the actual data associated with this index. In this case, a block with position: 0 and count: 70.

Let us start analyzing this data. 0,10, 8 tells us shared, non shared, value . Indeed, the next 10 bytes spell out ‘tests/0000’ and the 8 after that are ‘values/0’. And what about the rest?

Now we have 9,1,8. Shared is 9, non shared 1 and value size is 8. We take the first 9 bytes of the previous key, giving us ‘tests/000’ and append to it the non shared data, in this case, byte with value 49 (‘1’ in ASCII), giving us a full key of ‘tests/0001’. The next 8 bytes after that spell out ‘values/1’. And the rest is pretty much just like it.

Now, I promised that I would talk about restarts. You see how we can use the shared/non shared data to effectively compress data. However, that has a major hidden cost. In order to figure out what the key is, we need to read all the previous keys.

In order to avoid this, we use the notion of restarts. Every N keys (by default, 16), we we will have a restart point and put the full key into the file. That means that we can skip ahead based on the position specified in the restart offset, and that in turn is governed by the number of restarts that we have in the index block.

And… that is pretty much it. This is the full and unvarnished binary dump of an SST. Obviously real ones are going to be more complex, but they all follow the same structure.

![]() .

.