Frans has a long post about how important documentation is for the maintainability of a project. I disagree.

Update: I have another post on this subject here.

Before we go on, I want to make sure that we have a clear understanding of what we are talking about. I am not thinking about end user documentation, or API documentation (in the case of a reusable library), but about implementation documentation for the project itself. I think that some documentation (high level architecture, coding approach, etc.) is important, but the level to which Frans is taking it seems excessive to me.

The thing is though: a team of good software engineers which works like a nicely oiled machinery will very likely create proper code which is easy to understand, despite the methodology used.

So, good people, good team, good interactions. That sounds like the ideal scenario to me. I can think of at least five different ways in which a methodology can break apart and poison such a team (assigning blame, a stiff hierarchy, overtime, lack of recognition, isolated responsibilities that create bottlenecks). Not really a good scenario.

The why is of utmost importance. The reason is that, because you have to make a change to a piece of code, you might be tempted to refactor the code a bit to a form which was rejected earlier because, for example, of bad side-effects for other parts.

And then I would run the tests and they would show that this causes failure in another part, or it would be caught in QA, or I would do the responsible thing and actually get a sense of the system before I start messing with it.

If you don't know the why of a given routine, class or structure, you will sooner or later make the mistake to refactor the code so it reflects what wasn't the best option and you'll find that out the hard way, losing precious time you could have avoided.

This really has nothing to do with the subject at hand. I can do the same with brand new code and go off on a tangent somewhere. It is something that you have to deal with in any profession.

That's why the why documentation is so important: the documented design decisions: "what were the alternatives? why were these rejected?" This is essential information for maintainability as a maintainer needs that info to properly refactor the code to a form which doesn't fall into a form which was rejected.

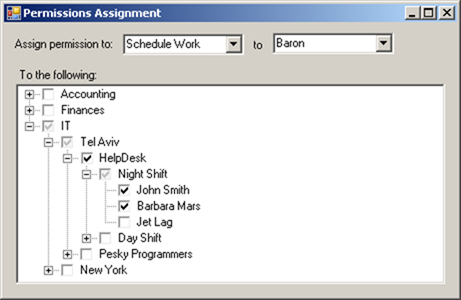

Documentation is important, yes, but I like it for the high level overview. "We use MVC with the following characteristics, points of interest in the architecture include X,Y,Z, points of interest in the code include D,B,C, etc". But I stop there, and rarely update the documents beyond that. We have the overall spec, we have the architectural overview and the code tourist guide, but not much more. Beyond that, you are supposed to go and read the code. The build script usually gets special treatment, by the way.

This also assumes that the original builders of the system were omniscient. Why shouldn't I follow a form that was rejected? Just because the original author of an application thought that Xyz was the end-all-be-all of software doesn't mean that Brg isn't a valid approach that should be considered. It should not surprise you that I reject the idea out of hand.

Code isn't documentation, it's code. Code is the purest form of the executable functionality you have to provide as it is the form of the functionality that actually gets executed, however it's not the best form to illustrate why the functionality is constructed in the way it is constructed.

Code can be a cumbersome way to express the true intent, which is why I invest a lot of time in coming up with intent revealing names and pushing all the infrastructure concerns down. The best way to illustrate why certain functionality exists in such a way is to cover it with tests; that way you can see the intended usage and can follow the train of thought of the previous guy. I routinely head off to the unit tests of various projects to get an insight into how such things work.
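As a minimal sketch of what that can look like, here is a small test class written with Python's `unittest`. The `ShoppingCart` class and its rounding rule are hypothetical, made up purely for illustration; they do not come from any project mentioned in this post. The point is that the test names carry the intent, and one of them records a design decision, including the alternative that was rejected.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP


class ShoppingCart:
    """Hypothetical example class, invented for this sketch."""

    def __init__(self):
        self._lines = []

    def add(self, unit_price, quantity=1):
        # Prices are passed as strings so Decimal keeps them exact.
        self._lines.append((Decimal(unit_price), quantity))

    def total(self):
        # Sum the exact line amounts first, then round once at the end.
        raw = sum((price * qty for price, qty in self._lines), Decimal("0"))
        return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


class ShoppingCartTests(unittest.TestCase):
    def test_empty_cart_totals_zero(self):
        self.assertEqual(ShoppingCart().total(), Decimal("0.00"))

    def test_total_is_rounded_once_at_the_end_not_per_line(self):
        # Design decision captured as a test: rounding each line separately
        # was the rejected alternative, because 0.335 + 0.335 would then
        # come out as 0.34 + 0.34 = 0.68 instead of 0.67.
        cart = ShoppingCart()
        cart.add("0.335")
        cart.add("0.335")
        self.assertEqual(cart.total(), Decimal("0.67"))
```

Running this with `python -m unittest` tells a maintainer both how the class is meant to be used and what happens if they refactor toward per-line rounding: the second test fails and its name says why.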

I've seen technical documents which did make a lot of sense and were essential to understanding what was going on at such a level that making changes was easy.

Frans, at what level were they? Earlier you were talking about the routine level, but I want to know: what do you think is the appropriate documentation coverage for a system?

If your project consists of say 400,000 lines of code, it's not a walk in the park to even get a slightest overview where what is located without reading all of those lines if there's no documentation which is of any value.

The problem here is that you make no assumption about the state of the code. I would take an undocumented 400,000 LOC code base that has (passing) unit tests over one that has extensive documentation but little to no tests, any time. The reasoning is simple: if it is testable, it is maintainable, period. Yes, it would take time to wrap my head around a system this size, but I can most certainly do it, and unit tests allow me to do a lot of things safely. Assume that you have extensive documentation; what happens when the code diverges from the documentation?

You see, documentation isn't a separate entity of the code written: it describes in a certain DSL (i.e. human readable and understandable language) what the functionality is all about; the code will do so in another DSL (e.g. C#). That's the essential part: you have to provide functionality in an executable form. Code is such a form, but it's arcane to read and understand for a human (or is your code always 100% bugfree when you've written a routine? I seriously doubt it, no-one is that good), however proper documentation which describes what the code realizes is another.

Documentation is not a DSL, and it is most certainly not understandable in many cases. Documentation can be ambiguous in the most insidious ways. The code is not another DSL either; that assumes that the code and the documentation are somehow related, but the code is what actually runs, so it is the authority on any system. Documentation can help understanding, but it doesn't replace code, and I seriously doubt that you can call it a DSL. The part that bothers me here is that the documentation is viewed as an executable form. Unless you are talking about something like FIT, that is not the case. I can't do documentation.VerifyMatch(code);
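For contrast, here is a rough sketch of the exception, documentation that the machine can check. It uses Python's standard `doctest` module as a small-scale stand-in for the FIT idea; that substitution is my assumption, and FIT itself works differently. The `slugify` function and the `verify_match` helper are hypothetical names made up for this example.

```python
import doctest


def slugify(title):
    """Turn a post title into a URL slug.

    The examples below are prose documentation and executable checks at once:

    >>> slugify("Code is not documentation")
    'code-is-not-documentation'
    >>> slugify("  Unit   Tests  ")
    'unit-tests'
    """
    return "-".join(title.lower().split())


def verify_match(func):
    """Run the examples in func's docstring and return how many failed."""
    finder = doctest.DocTestFinder()
    runner = doctest.DocTestRunner(verbose=False)
    # module=False skips module lookup; globs makes func visible to the examples.
    tests = finder.find(func, module=False, globs={func.__name__: func})
    for test in tests:
        runner.run(test)
    return runner.summarize(verbose=False).failed
```

Calling `verify_match(slugify)` returns the number of docstring examples that no longer match the code. Only the examples are checked, though; the sentences around them can still drift from the code without anything noticing, which is the problem with ordinary documentation.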

When I need to make a change and need to know why a routine is the way it is, I look up the design document element for that part and check why it is the way it is and which alternatives are rejected and why. After 5 years, your own code also becomes legacy code. Do you still maintain code you've written 2-3 years ago? If so, do you still know why you designed it the way it is designed and also will always avoid to re-consider alternatives you rejected back then because they wouldn't lead to the right solution?

I still maintain code that I wrote two years ago (Rhino Mocks comes to mind), and I kept very little documentation about why I did some things. But I have near 100% test coverage, and the ability to verify that I still have working software. Speaking on the paid side of the fence, a system that I started writing two years ago has gone through two major revisions in the meantime, and is currently being maintained by another team. I am confident in my ability to go there, sit with the code, and understand what is going on. And of course, "I have no idea why I did this bit" moments are fairly common, but after checking the flow of the code, it is usually clear that either A) I was an idiot, or B) I had this and that good reason for it. Sometimes it is both at the same time.

It needs pointing out again: what was true 5 years ago is something that you really need to reconsider today.

What's missing is that a unit test isn't documenting anything

And here I flat out disagree. Unit tests are a great way to document a system in a form that keeps it current. Reading the tests for a class can give you a lot of insight about how it is supposed to be used, and what the original author thought about when he built it.

It describes the same functionality but in such a different DSL that a human isn't helped by wading through thousands and thousands of unit tests to understand what the api does and why.

Not really any different than wading through thousands of pages of documentation, which you can't even be sure are still valid.

Using unit tests for learning purposes or documentation is similar to learning how databases work, what relational theory is, what set theory is etc. by looking at a lot of SQL queries.

The only comment that I have for this: That is how I did it.

Wouldn't you agree that learning how databases work is better done by reading a book about the theory behind databases, relational theory, set theory and why SQL is a set-oriented language?

Maybe, but I believe that the best way to learn something is to use it in anger, and there is absolutely nothing that can beat having something to play with and explore.