The cost of messaging

Greg Young has a post about the cost of messaging. I fully agree that the cost isn't going to be in the time that you spend actually writing the message body. You are going to have a lot of those, and if you take more than a minute or two to write one, I am offering overpriced speed-typing courses.

The cost of messaging, and a very real one, comes when you need to understand the system. In a system where message exchange is the form of communication, it can be significantly harder to understand what is going on. For a tightly coupled system, you can generally just follow the path of the code. But for messages?

When I publish a message, that is all I care about in the view of the current component. But in the view of the system? I sure as hell care about who is consuming it and what it is doing with it.

Usually, the very first feature that I write in a system is logging in a user. That is good proof that all the systems are working.

We will ignore the UI and the actual backend for user storage for a second. Let us think about how we would deal with this issue if we had messaging in place. We have the following guidance from Udi about this exact topic. I am going to try to break it down even further.

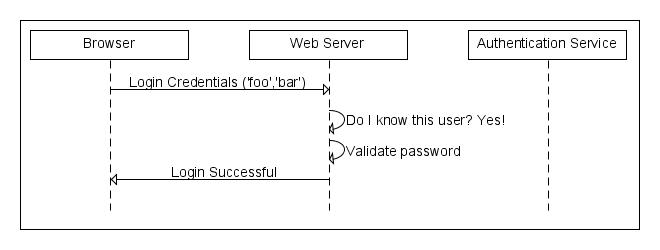

We have the following components in the process: the user, the browser (distinct from the user), the web server, and the authentication service.

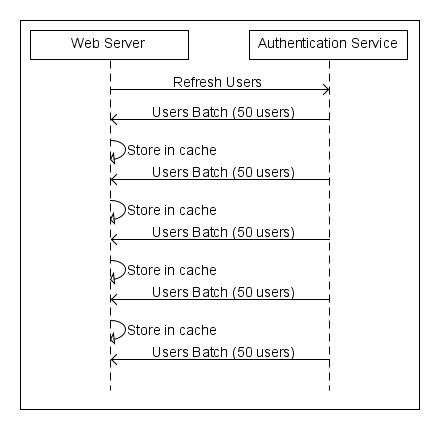

We will start looking at how this approach works by seeing how system startup works.

The web server asks the authentication service for the users. The authentication service sends the web server all the users it is aware of. The web server then caches them internally. When a user tries to log in, we can now satisfy that request directly from our cache, without having to talk to the authentication service. This means that we have a fully local authentication story, which would be blazingly fast.
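To make the startup step concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the class and method names (`AuthenticationService`, `WebServer`, `all_users`, `start`) are hypothetical, the hashing parameters are arbitrary, and a direct method call stands in for the request/reply messages that a real bus would carry.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2 from the standard library; the iteration count is illustrative.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 10_000)


class AuthenticationService:
    """Hypothetical stand-in for the remote authentication service."""

    def __init__(self):
        self._users = {}  # name -> (salt, password hash)

    def register(self, name, password):
        salt = os.urandom(16)
        self._users[name] = (salt, hash_password(password, salt))

    def all_users(self):
        # Reply to the web server's startup request: every known user.
        return dict(self._users)


class WebServer:
    """Hypothetical web server that answers logins from local state only."""

    def __init__(self):
        self._cache = {}

    def start(self, auth_service):
        # One bulk load at startup (a plain call here, a message in practice).
        self._cache.update(auth_service.all_users())

    def login(self, name, password):
        # No call to the authentication service on this path.
        entry = self._cache.get(name)
        if entry is None:
            return False
        salt, expected = entry
        return hmac.compare_digest(hash_password(password, salt), expected)
```

Once `start` has run, `login` never leaves the process, which is where the "fully local" claim comes from.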

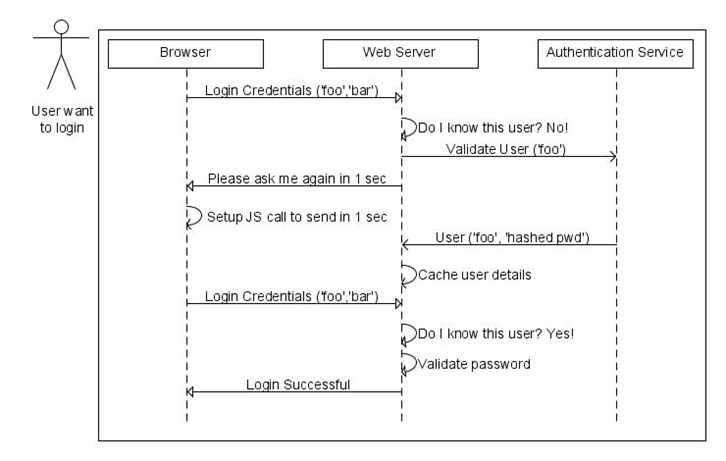

But what happens if we get a user that we don't have in the cache? (Maybe the user just registered and we weren't notified about it yet?)

We ask the authentication service whether or not this is a valid user. But we don't wait for a reply. Instead, we send the browser the instruction to call us after a brief wait. The browser sets this up using JavaScript. During that time, the authentication service responds, telling us that this is a valid user. We simply put this into the cache, the same way we handle all user updates.

Then the browser calls us again (note that this is transparent to the user), and we have the information that we need, so we can successfully log them in:
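The round trip above can be sketched roughly as follows. This is an illustration under stated assumptions, not the actual implementation: the message names (`ValidateUser`, `UserValid`), the `retry_after_ms` field, and the in-memory `outbox` queue that stands in for the bus are all hypothetical, and password checking is left out to keep the flow visible.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class WebServer:
    outbox: deque = field(default_factory=deque)   # messages to the auth service
    valid_users: set = field(default_factory=set)  # local state

    def login(self, name: str) -> dict:
        if name in self.valid_users:
            return {"status": "ok"}
        # Fire and forget: ask about the user, but do not wait for the reply.
        self.outbox.append({"type": "ValidateUser", "name": name})
        # Tell the browser to call back after a brief wait.
        return {"status": "retry", "retry_after_ms": 1000}

    def handle(self, message: dict) -> None:
        # Replies are handled like any other user update.
        if message["type"] == "UserValid":
            self.valid_users.add(message["name"])


def auth_service_step(server: WebServer, known_users: set) -> None:
    # Hypothetical authentication service: drain requests, reply for known users.
    while server.outbox:
        request = server.outbox.popleft()
        if request["name"] in known_users:
            server.handle({"type": "UserValid", "name": request["name"]})
```

The first `login` call returns the retry instruction; after the authentication service has processed the queue, the browser's second call finds the user in local state and succeeds.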

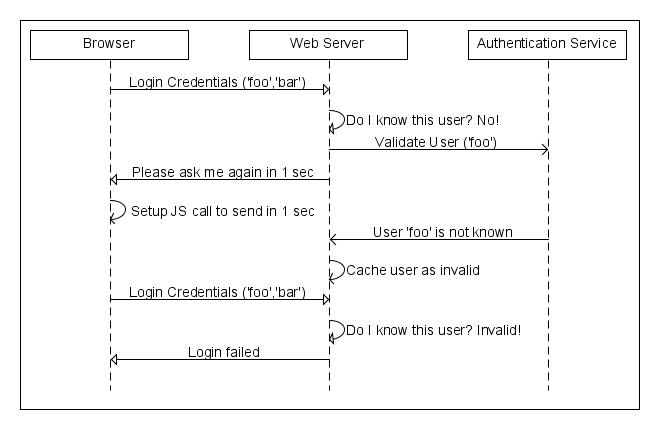

There is another scenario here: what happens if the user is not valid? The first part of the scenario is identical: we ask the authentication service to tell us whether this user is valid or not. When the service replies that this is not a valid user, we cache that. When the browser calls back, we can tell it that this is not a valid user.

(Just to make things interesting, we also have to ensure that the invalid users cache expires or has a limited size, because otherwise this is an invitation for a DoS attack.)
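One way to bound the invalid users cache is to give every entry an expiry time and cap the total number of entries. The sketch below is an assumption about how that could look; the class name `InvalidUserCache`, the default limits, and the injectable `clock` parameter are all hypothetical.

```python
import time
from collections import OrderedDict


class InvalidUserCache:
    """Remembers rejected user names, bounded in both size and age."""

    def __init__(self, max_entries=10_000, ttl_seconds=300.0, clock=time.monotonic):
        self._entries = OrderedDict()  # name -> expiry timestamp, oldest first
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock

    def add(self, name):
        # Re-adding a name moves it to the newest position.
        self._entries.pop(name, None)
        self._entries[name] = self._clock() + self._ttl
        # Cap memory use: evict the oldest entries once over the limit.
        while len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)

    def __contains__(self, name):
        expiry = self._entries.get(name)
        if expiry is None:
            return False
        if expiry <= self._clock():
            # Expired: forget it, so the next login asks the service again.
            del self._entries[name]
            return False
        return True
```

With both limits in place, a flood of made-up user names can only occupy a fixed amount of memory, and a name that was rejected before registration does not stay rejected forever.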

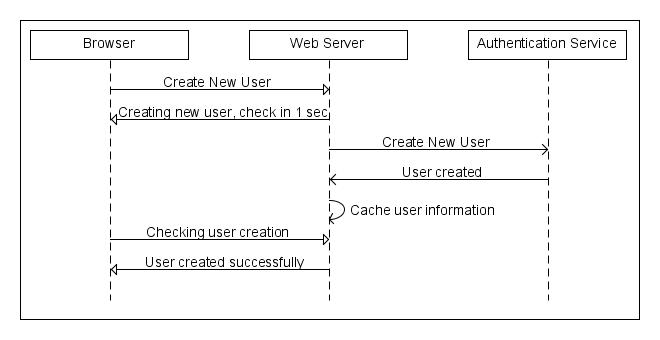

Finally, we have the process of creating a new user in the application, which works in the following fashion:
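As a rough sketch of that flow, assuming (as the comments below describe) that the authentication service publishes a User Created message to every web server and an InvalidateUser message when a user is removed: the in-process `Bus` class, the method names, and the payload shape are hypothetical stand-ins for a real messaging library.

```python
class Bus:
    """Tiny in-process publish/subscribe stand-in for a real message bus."""

    def __init__(self):
        self._subscribers = {}  # message type -> list of handlers

    def subscribe(self, message_type, handler):
        self._subscribers.setdefault(message_type, []).append(handler)

    def publish(self, message_type, payload):
        for handler in self._subscribers.get(message_type, []):
            handler(payload)


class AuthenticationService:
    def __init__(self, bus):
        self._bus = bus
        self._users = set()

    def create_user(self, name):
        self._users.add(name)
        # Every subscribed web server hears about the new user.
        self._bus.publish("UserCreated", {"name": name})

    def remove_user(self, name):
        self._users.discard(name)
        self._bus.publish("InvalidateUser", {"name": name})


class WebServer:
    def __init__(self, bus):
        self.valid_users = set()  # local state, updated only by messages
        bus.subscribe("UserCreated", self._on_user_created)
        bus.subscribe("InvalidateUser", self._on_user_invalidated)

    def _on_user_created(self, message):
        self.valid_users.add(message["name"])

    def _on_user_invalidated(self, message):
        self.valid_users.discard(message["name"])
```

Each web server keeps its own copy of the state and never queries the authentication service on the login path; it only reacts to what is published.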

Now, I just took three pages to explain something that can be explained very easily using:

- usp_CreateUser

- usp_ValidateLogin

Backed by the ACID guarantees of the database, those two stored procedures are much simpler to reason about, explain and in general work with.

We have way more complexity to work with. And this complexity spans all layers, from the back end to the UI! My UI guys need to know about async messaging!

Isn't this a bit extreme? Isn't this heavy weight? Isn't this utterly ridiculous?

Yes, it is, absolutely. The problem with the two SPs solution is that it would work beautifully for a simple scenario, but it creaks when we start talking about the more complex ones.

Authentication is usually a heavy operation. ValidateLogin is not just doing a query. It is also recording stats, updating the last login date, etc. It is also something that users will do frequently. It makes sense to try to optimize that.

Once we leave the trivial solution area, we are faced with a myriad of problems that the messaging solution solves. There is no chance of farm-wide locks in the messaging solution, because there is never a lock taking place. There are no waiting threads in the messaging solution, because we never query anything but our own local state.

We can take the authentication service down for maintenance and the only thing that will be affected is new user registration. The entire system is more robust.

Those are the tradeoffs that we have to deal with when we get to high complexity features. It makes sense to start crafting them, instead of just assembling a solution.

Just stop and think about what it would require of you to understand how logins work in the messaging system, vs. the two SP system. I don't think that anyone can argue that the messaging system is simpler to understand, and that is where the real cost is.

However, I think that after getting used to the new approach, you'll find that it starts making sense. Not only that, but it is fairly easy to see how to approach problems once you have started to get a feel for messaging.

Comments

I'm pretty cautious of the JavaScript thing. Why not just lock at the web server and wait for a response from the authentication service before returning? Are you worried about the requests locking up the web server's worker threads?

Edit: also, how do you manage things like a user's identity being removed from the authentication service? The local cache wouldn't reflect this until the next update, and this might not always be 'ok'.

Stephen,

That introduces locking at the server level. That means that you are open to deadlocks in some scenarios.

It is much easier if you have zero locks whatsoever.

Stephen,

I publish an InvalidateUser message.

@Stephen: I believe yes, this is to prevent the locking of web server threads.

Ayende, What message would you display to the user, while he is waiting that one second?

Um,

"please wait while the system is logging you in?"

"please wait while we verify your identity?"

You can also use async web requests to do this and not have to deal w/ the javascript polling. Of course it all depends on how long it can take--if the connection would time out or not.

I would rather not deal with async programming at any level.

This is actually a much simpler programming model

And, of course, async web requests still take server resources.

Ayende,

I'm sorry, I didn't explain myself properly. Reading my comment again, I don't know what I was thinking :\

What I meant was not what would the actual message be - but what would the mechanism be. Would you use redirection or AJAX. i.e. Will the user notice a redirection on form submit, or simply a wait? And will he notice a redirection on the second request, or will the page 'magically' populate?

I think I'd go with catching the form's submit in javascript, displaying a message and causing an asynchronous request. If the "wait and try again" message is received, cause another async request after the wait, and only when login is successful, redirect to an after-the-login page.

Sadly, I haven't had the pleasure of working on scalable online systems; the only online systems I made were for closed small groups of internal users. That is why I'm always interested in your opinion as a more experienced developer - what would you do?

You're saying the javascript timeout model is more simple than an async web request? I'm not sure I agree with that...

And yea, it takes server resources... kind of. It doesn't take a thread-- which is the most important resource as connections are plentiful.

configurator,

I do it either way.

Usually with ajax form submit, but also with a redirect to a processing page.

It depends what I expect the usual latency to be.

If most requests are processed immediately, then it is ajax. If this is a lengthy process, then they go to a processing page.

It is much simpler: there is no threading whatsoever, it is just "execute this piece of code later".

In essence, this is like thread sleep, which is very easy to understand.

It also makes most of the server code something like:

    if (data exists in local state)
        send
    else
        404

I think if you are going to involve JavaScript in the posts then I'd go with what Aaron says and do the authentication with ajax requests. I actually think this would keep the page code much simpler and more reliable.

I also think that I explained myself wrong about locking. I don't think you have a deadlock situation, and if you did, this would still affect the JavaScript solution, effectively leaving it trying again over and over for something that won't return.

I'd prefer to stay at the server until the auth server can give me (the web server) an answer.. but I understand the problem with this hogging request handling threads..

How about if a new user registration forced a cache update ?

Typically an email is sent out to a user after registration confirming their registration.

I would gather new user registration would be far lower than login requests.

(I don't personally like the idea of hanging up a login while you wait for a cache update)

Steve,

In a distributed system, how do you force a cache update?

I think this is a great explanation of the cost of messaging and why it might be worth it anyway.

Thanks.

Ayende, is it fair to say that messaging-based systems work better for non-read-heavy applications? Or, alternatively, for an application that can locally cache all or most of its data?

No, it is not.

There are ways to work with that as well.

It simply requires a different way of thinking about this.

Ayende,

Do you have a sample code for the above?

Kinda confused on:

"The authentication service send the web server all the users he is aware off. The web server then cache them internally."

When you say "cache them internally", is it a one-time thing, I mean upon the start of the web server (IIS), or every time a browser is opened and tries to use the login page?

And how long would the cached data be retained, and when would it next refresh?

br,

ca

I haven't worked in a messaging-centric system before, so forgive my naivete.

Let's take StackOverflow as an example since we all know it. We'll assume for the sake of argument their dataset is too large to fit entirely into the server's memory...

If they were to rewrite that site to be based on a messaging system and a user navigated to a question page for a question that was not yet cached on that server, how would you suggest handling it?

I see three options here:

1) Have the page send a message requesting the question & answers. Use the JavaScript timeout you demonstrated in the article to pause for the response.

2) Send the message so that the cache is populated for the next request, but display a 404/some-other-error for the current request saying the data wasn't available.

3) Make the messaging synchronous and wait for the response in the same request thread (thus locking it).

My gut tells me solution #1 would result in a poor user experience and overly complicate the UI layer. It would also result in a significant increase in page requests.

Very few applications would say that solution #2 is acceptable, though certainly there may be some instances where you don't need the most up-to-date data (e.g.: Amazon's SimpleDb service does not guarantee each node will have the current state at all times).

Through the process of elimination #3 seems to be the best, but I am curious as to how performance would be impacted given the locking concerns.

Your thoughts?

Chris,

Per web server.

And I would use something like Rhino DHT, so it is a persistent cache.

After I wrote the previous comment, something occurred to me: in a messaging-centric application, distributed caching (DHT, Velocity, memcached) becomes much more important (thus your work on DHT). It doesn't wholly solve the issues I raised above, but it would at least lessen the frequency at which they occur.

Troy,

For something like SO, I would use a local persistent cache. It is unlikely that the data size is large enough to exceed common HD sizes for servers.

But let's say that it does, and we do want to ensure good response times for the users.

At that point, I would apply the "not all problems are born equal" metric.

That is, for SO, I would say that it is likely that the most viewed questions are going to be in the cache, so we have that benefit for the common case.

For the uncommon case, I would probably make a sync call to get the data. It depends on too many factors to really answer in a good way.

Ajax is a great way of breaking apart the page, and a loading indicator can do wonders for the user's experience, so it might very well not be necessary. I would try to avoid that, if possible, but it is still an option.

Troy,

It is not quite caching; it is the notion of local state that is important.

Interesting - I think I have a better idea of how you would approach it. Thanks for taking the time to respond.

A guy who always supported ORMs now talking about SPs!!! Things change !!!

Boo,

Not really. I am trying to make a point here.

And SPs have their place. CreateUser and ValidateLogin is NOT their place, but they make it easy to talk about certain things.

Ayende, when you say you can't update the web servers when a new user is added, you said:

"In a distributed system, how do you force a cache update?"

But how are you managing the authentication servers sending the 'this user is gone' messages?

You said you would publish a message from the authentication server telling subscribers the user is invalid, why would this not also be done for added users?

Look at the last diagram, that is exactly what is going on.

User Created is a published message that goes to all web servers, letting them know about the new user.

My point was that any way to inform the web servers to drop the cache was also a way to inform them about the new user

Hi Ayende,

Thank you for sharing this. I'm now working in a system where using a solution like the one you have described would be a great benefit.

Unfortunately I have a problem; my web site needs to comply with the Web Content Accessibility Guidelines AA. This roughly means no JavaScript dependency for site functionality. :(

You can do the same using simple HTML.

It isn't as slick, for sure, but it is certainly possible.

"Steve,

In a distributed system, how do you force a cache update?"

I was thinking in a pub-sub situation where 'UpdateCache' is a subscriber to the 'NewUser'

Looking for a way to avoid the 'please wait' scenario.

"Look at the last diagram, that is exactly what is going on.

User Created is a published message that goes to all web servers, letting them know about the new user."

Which you answered already above :)

(I'm also interested in learning more about how Rhino DHT works)

Sorry, the diagrams are not detailed enough.

The cache is actually hidden behind a service interface that manages getting updates from the bus and updating its own local state.

@Pedro: You should make the site use JavaScript for a good User Experience; but when JavaScript is disabled, a fallback form post with a META tag that causes a refresh should be used.

Re: We have the following guidance from Udi about this exact topic.

That is one way of doing login. A highly performant and scalable way.

Not the only way - not even when using messaging.

I would argue that building an RPC based solution having similar performance and scale characteristics would be more difficult and complex.

As such, I find that you're not calling out the comparative cost of messaging which is critical for readers in making the decision whether to adopt messaging or not.

Udi,

Agreed.

I certainly think that a similar solution using RPC would be much more complex and likely more brittle.

But people aren't comparing that RPC solution to the messaging solution.

They compare CreateUser and ValidateLogin to the messaging solution.

If their requirements are such that they don't require scalability, robustness, etc - then they don't need messaging within that bounded context.

That being said, there is still value in having that bounded context raise events / publish notifications when user info is changed.
